Validating AI Models on African Datasets: Pitfalls and Practical Solutions

AI models trained or deployed in Africa often fail when evaluated against real-world needs because validation steps and datasets do not reflect Africa’s demographic, linguistic, clinical, and infrastructural diversity. This APA-style article for an African audience explains the key validation pitfalls (representativeness, label quality, distribution shift, lack of external validation, weak documentation, governance), provides practical, low-cost solutions (datasheets & model cards, stratified sampling, cross-site validation, federated learning, calibration & uncertainty quantification, human-in-the-loop), and gives a step-by-step validation roadmap founders, researchers, and health ministries can use. Recommendations are grounded in global best practice and African community efforts to build locally-relevant AI.

Abstract

As artificial intelligence (AI) applications expand across the African continent, rigorous validation on African datasets is essential to ensure safety, fairness, and effectiveness. This paper identifies common pitfalls in validating AI models with African data: data scarcity and fragmentation, non-representative samples, low-quality or inconsistent labels, distributional shift, missing metadata and documentation, and weak governance. It then presents practical solutions and an actionable validation roadmap, including dataset documentation (datasheets), model reporting (model cards), sampling and external validation strategies, federated and privacy-preserving approaches, uncertainty quantification, and community-driven data initiatives. The recommendations are intended for researchers, startups, clinicians, and policymakers working to deploy robust AI in African contexts.

Introduction

AI systems are only as reliable as the data and validation processes used to build and test them. In Africa, datasets are often fragmented, unrepresentative, or undocumented; models validated only on non-African data or on small, biased samples will frequently underperform in real-world African settings. For high-stakes use cases such as healthcare, agriculture, education, or governance, this mismatch can cause harm, unfair outcomes, and wasted investment. Global best practices (dataset and model documentation, external validation, and ethics guidance) provide foundations for robust validation; adapting these practices to African realities requires practical methods that acknowledge resource constraints and local diversity.

Key Pitfalls When Validating AI on African Data

Data scarcity and fragmentation

Many African domains lack large, well-curated datasets. Where data exist, they are spread across facilities, companies, and countries with different formats and standards, making aggregation and representative validation difficult. This scarcity undermines statistical power and external validity.

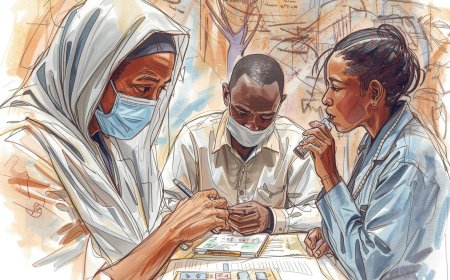

Non-representative sampling (population bias)

Datasets collected in urban referral hospitals, pilot projects, or specific language groups may not represent the age, ethnic, socioeconomic, geographic, or phenotypic diversity of target populations, leading to biased performance for under-represented groups.

Label quality and inter-annotator variability

Labels in medical imaging, text classification, or speech tasks often depend on scarce expert annotators, which leads to inconsistent or noisy labels. In language and cultural tasks, annotation guidelines created outside local contexts can lead to mislabeling.

Distributional shift & domain mismatch

Models trained on non-African datasets (or limited African subsets) often fail when deployed in different hospitals, regions, or device types (e.g., different smartphone cameras, imaging machines, or recording conditions). Covariate shift, label shift, and concept drift are common.

Poor metadata and absent documentation

Without documentation on data provenance, collection protocols, and population descriptors, downstream validators and auditors cannot judge whether test sets match intended deployment populations.

Insufficient external and prospective validation

Performance reported on held-out folds of the same dataset tends to overestimate real-world performance. External validation on independent cohorts (different sites, countries, device types) and prospective evaluations are frequently missing.

Governance, consent, and privacy constraints

Legal and ethical constraints on data sharing, variability in national laws, and lack of clear governance frameworks complicate pooling data for validation and make reproducibility difficult.

Ignoring sociocultural and language diversity

For NLP and speech models, the scarcity of labeled corpora in African languages and dialects leads to brittle systems; models validated only on English or other colonial languages miss important local use cases. Community-led initiatives are working to change this, but validation must explicitly include local languages (Masakhane, n.d.).

Evaluation metrics and calibration gaps

Standard aggregate metrics (accuracy, AUC) can mask subgroup failures. Models may also be poorly calibrated in new populations, making probability estimates misleading for clinical or operational decision-making.

Practical Solutions & Strategies

1. Start with sound dataset documentation (Datasheets)

Adopt dataset documentation practices (e.g., Datasheets for Datasets) to record motivation, population, collection methods, annotation process, known limitations, and recommended uses. This transparency helps validators decide whether a dataset is suitable for a particular deployment and enables safer model reuse (Gebru et al., 2018).

Quick action: For every dataset, create a short datasheet covering who was sampled, when and where data were collected, how labels were produced, preprocessing steps, and privacy constraints.
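
As a minimal sketch of such a short datasheet, the items listed in the quick action can be captured in a small structured record that is saved next to the data. The field names and the example dataset are hypothetical illustrations, not a standard schema; the full Datasheets for Datasets questionnaire is considerably longer.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class Datasheet:
    """Short datasheet record; field names are illustrative assumptions."""
    name: str
    who_was_sampled: str
    collection_period: str
    collection_sites: list
    labeling_process: str
    preprocessing_steps: list
    privacy_constraints: str
    known_limitations: list = field(default_factory=list)

    def missing_fields(self):
        """Return the names of fields left empty so gaps are visible early."""
        return [k for k, v in asdict(self).items() if v in ("", [], None)]


# Hypothetical example entry; every value here is made up for illustration.
sheet = Datasheet(
    name="example-clinic-images-v0",
    who_was_sampled="Adult outpatients at two urban referral hospitals",
    collection_period="2023-01 to 2023-06",
    collection_sites=["hospital_1", "hospital_2"],
    labeling_process="Two readers per image, disagreements adjudicated",
    preprocessing_steps=["resize to 512x512", "remove burned-in identifiers"],
    privacy_constraints="",  # deliberately left empty to show the check
    known_limitations=["no rural sites", "single device vendor"],
)

print(json.dumps(asdict(sheet), indent=2))
print("Fields still to complete:", sheet.missing_fields())
```

Storing the record as JSON alongside the dataset keeps it readable by both people and validation scripts; the empty-field check is one simple way to stop an incomplete datasheet from being released unnoticed.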

2. Use model reporting (Model Cards) for evaluable claims

Publish Model Cards for each released model summarizing evaluation metrics across subgroups, intended use cases, and known biases. Model cards force teams to test beyond a single aggregate metric and communicate limitations to users (Mitchell et al., 2019).

Quick action: Include subgroup performance (age, sex, region, device), calibration plots, and a clear “do not use” list.

3. Design validation to reflect deployment (stratified & cluster sampling)

When collecting test data, use stratified sampling across key axes (region, facility type, device, language). For clustered data (patients nested in hospitals), use cluster-aware cross-validation or hold entire sites out for true external testing.

Quick action: Reserve at least one wholly independent site (different region/country) for final evaluation.
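
The two sampling ideas above can be sketched in plain Python. This is an illustration under simple assumptions: each record is a dictionary carrying hypothetical `site` and `device` keys, and the toy records are synthetic. In practice a library such as scikit-learn offers grouped splitters for the same purpose.

```python
import random
from collections import defaultdict


def leave_one_site_out(records, site_key="site"):
    """Yield (held_out_site, train, test); each site is fully held out once."""
    sites = sorted({r[site_key] for r in records})
    for held_out in sites:
        train = [r for r in records if r[site_key] != held_out]
        test = [r for r in records if r[site_key] == held_out]
        yield held_out, train, test


def stratified_sample(records, strata_keys, n_per_stratum, seed=0):
    """Draw up to n_per_stratum records from every combination of strata."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for r in records:
        strata[tuple(r[k] for k in strata_keys)].append(r)
    sample = []
    for key in sorted(strata):
        group = strata[key]
        sample.extend(rng.sample(group, min(n_per_stratum, len(group))))
    return sample


# Synthetic records: 3 sites x 2 device types x 5 records each.
combos = [(s, d) for s in ("site_A", "site_B", "site_C")
          for d in ("phone", "scanner") for _ in range(5)]
records = [{"id": i, "site": s, "device": d} for i, (s, d) in enumerate(combos)]

for held_out, train, test in leave_one_site_out(records):
    print(held_out, "train:", len(train), "test:", len(test))

test_set = stratified_sample(records, ["site", "device"], n_per_stratum=2)
print("stratified test set size:", len(test_set))
```

Holding out whole sites means no patient, device, or protocol from the test site leaks into training, which is what makes the resulting estimate closer to true external validation.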

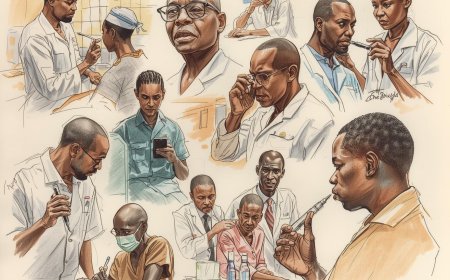

4. Prioritise external & prospective validation

External validation on independent cohorts (different hospitals, devices, or countries) should be mandatory for high-stakes models. Prospective validation—testing the model on incoming live data with pre-specified endpoints—gives the strongest evidence of real-world utility.

Quick action: Negotiate one or two partner sites early that agree to serve as external validators.

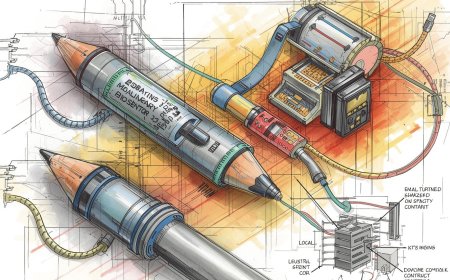

5. Quantify uncertainty & calibrate predictions

Report calibration (e.g., reliability diagrams, Brier score) and use uncertainty estimation (Monte Carlo dropout, ensemble methods, Bayesian methods) to flag low-confidence predictions for human review.

Quick action: Add a confidence threshold and routing policy (e.g., low-confidence → clinician) in deployment.
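
A small sketch of the calibration checks and routing policy described above. The thresholds (0.3 and 0.7), the bin count, and the route names are hypothetical placeholders that a team would need to set from its own validation data and clinical requirements.

```python
def brier_score(probs, labels):
    """Mean squared difference between predicted probability and outcome."""
    return sum((p - y) ** 2 for p, y in zip(probs, labels)) / len(probs)


def reliability_bins(probs, labels, n_bins=5):
    """Per non-empty bin: (mean predicted prob, observed positive rate, count).

    Plotting the first value against the second gives a reliability diagram.
    """
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the last bin
        bins[idx].append((p, y))
    summary = []
    for b in bins:
        if b:
            mean_p = sum(p for p, _ in b) / len(b)
            pos_rate = sum(y for _, y in b) / len(b)
            summary.append((mean_p, pos_rate, len(b)))
    return summary


def route(prob, low=0.3, high=0.7):
    """Send predictions in the uncertain middle band to a human reviewer."""
    if low < prob < high:
        return "human_review"
    return "auto_positive" if prob >= high else "auto_negative"


# Toy predictions and outcomes, for illustration only.
probs = [0.1, 0.2, 0.45, 0.55, 0.8, 0.9]
labels = [0, 0, 1, 0, 1, 1]

print("Brier score:", round(brier_score(probs, labels), 4))
for mean_p, pos_rate, n in reliability_bins(probs, labels):
    print(f"predicted {mean_p:.2f} vs observed {pos_rate:.2f} (n={n})")
print([route(p) for p in probs])
```

A single probability band is the simplest routing rule; teams using ensembles or Monte Carlo dropout could route on the spread between members instead, using the same pattern.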

6. Use domain adaptation and transfer learning carefully

Transfer learning can bootstrap performance in low-data settings, but always fine-tune and validate on local data. When using models trained elsewhere, conduct ablation studies and domain-specific evaluation before deployment.

Quick action: Always retain a local validation set and report delta performance between source and target domains.
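
Reporting the delta can be as simple as computing the same metric on the source-domain test set and on the retained local validation set. The sketch below uses accuracy and toy predictions purely for illustration; the function names are assumptions, and any metric with the same signature could be passed in.

```python
def accuracy(preds, labels):
    """Fraction of predictions that match the labels."""
    return sum(1 for p, y in zip(preds, labels) if p == y) / len(labels)


def performance_delta(source_preds, source_labels,
                      target_preds, target_labels, metric=accuracy):
    """Return the metric on each domain and the target-minus-source gap."""
    src = metric(source_preds, source_labels)
    tgt = metric(target_preds, target_labels)
    return {"source": src, "target": tgt, "delta": tgt - src}


# Toy example: the model does worse on the local (target) validation set.
report = performance_delta(
    source_preds=[1, 0, 1, 1, 0], source_labels=[1, 0, 1, 0, 0],
    target_preds=[1, 0, 0, 1, 0], target_labels=[1, 1, 1, 1, 0],
)
print(report)
```

A negative delta signals that performance dropped in the target domain and that local fine-tuning or further investigation is needed before deployment.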

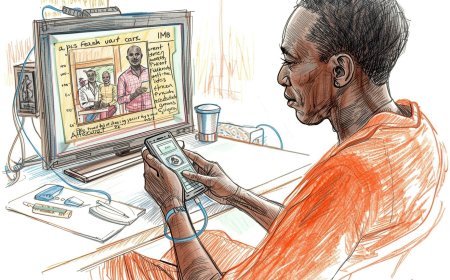

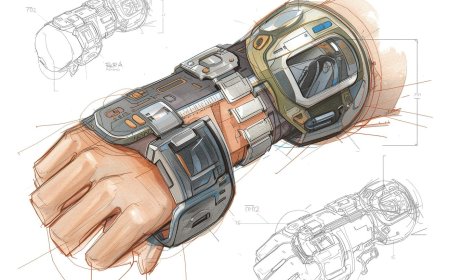

7. Leverage federated & privacy-preserving approaches for pooled validation

Federated learning and secure aggregation let institutions jointly validate models without moving raw data—useful where legal or logistical barriers prevent central pooling. However, federated setups require careful orchestration, reproducibility checks, and monitoring for client heterogeneity.

Quick action: Pilot a federated validation for a small set of partner clinics to test feasibility.
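
The core idea of federated validation can be sketched as follows: each clinic evaluates the model locally and shares only aggregate confusion-matrix counts, which a coordinator sums. This is a simplified illustration with hypothetical clinic names and toy data. It shares counts in the clear and does not implement secure aggregation, and very small counts could still reveal information about individuals.

```python
def local_confusion(preds, labels):
    """Run inside each site; only these four counts leave the site."""
    counts = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for p, y in zip(preds, labels):
        if p == 1 and y == 1:
            counts["tp"] += 1
        elif p == 1 and y == 0:
            counts["fp"] += 1
        elif p == 0 and y == 1:
            counts["fn"] += 1
        else:
            counts["tn"] += 1
    return counts


def aggregate(site_counts):
    """Coordinator step: sum the counts reported by every site."""
    total = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for counts in site_counts.values():
        for key in total:
            total[key] += counts[key]
    return total


def sensitivity(c):
    return c["tp"] / (c["tp"] + c["fn"]) if (c["tp"] + c["fn"]) else None


def specificity(c):
    return c["tn"] / (c["tn"] + c["fp"]) if (c["tn"] + c["fp"]) else None


# Toy per-site results; in practice each call runs at the clinic itself.
site_counts = {
    "clinic_1": local_confusion([1, 1, 0, 0], [1, 0, 0, 1]),
    "clinic_2": local_confusion([1, 0, 0, 0, 1], [1, 0, 0, 0, 1]),
}
pooled = aggregate(site_counts)

for site, counts in site_counts.items():
    print(site, "sensitivity:", sensitivity(counts))
print("pooled sensitivity:", sensitivity(pooled),
      "pooled specificity:", specificity(pooled))
```

Printing the per-site values alongside the pooled ones makes client heterogeneity visible: a pooled figure can look acceptable while one clinic performs much worse.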

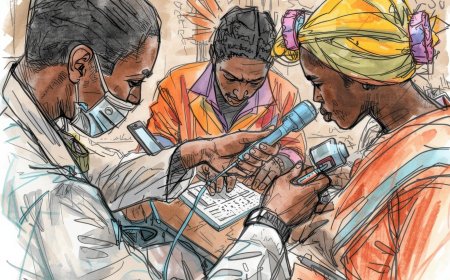

8. Improve label quality with active learning and consensus annotation

Use active learning to prioritize samples for expert annotation and consensus/majority voting to reduce noise. Invest in annotation guidelines and local annotator training to improve label validity.

Quick action: Run an inter-annotator agreement study and iterate annotation guidelines until acceptable κ (kappa) values are reached.
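
For two annotators, the agreement study can use Cohen's kappa, which corrects observed agreement for the agreement expected by chance. The sketch below computes it from scratch on toy labels; the annotations are invented for illustration, and what counts as an "acceptable" value is a project decision rather than something the code determines.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both annotators must label the same non-empty set")
    n = len(labels_a)
    # Observed agreement: share of items given the same label by both.
    observed = sum(1 for a, b in zip(labels_a, labels_b) if a == b) / n
    # Chance agreement: product of each annotator's label frequencies.
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    categories = set(labels_a) | set(labels_b)
    expected = sum(count_a[c] * count_b[c] for c in categories) / (n * n)
    if expected == 1.0:  # both annotators used one identical label throughout
        return 1.0
    return (observed - expected) / (1 - expected)


# Toy annotations from two hypothetical annotators.
annotator_1 = ["pos", "pos", "neg", "neg", "pos", "neg", "neg", "neg", "pos", "neg"]
annotator_2 = ["pos", "neg", "neg", "neg", "pos", "neg", "pos", "neg", "pos", "neg"]

kappa = cohens_kappa(annotator_1, annotator_2)
print("Cohen's kappa:", round(kappa, 3))
```

Here the annotators agree on 8 of 10 items, but because chance agreement is 0.52, kappa is noticeably lower than the raw agreement rate. With more than two annotators, a multi-rater statistic such as Fleiss' kappa would be the analogous choice.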

9. Evaluate fairness and subgroup performance

Report disaggregated metrics (sensitivity, specificity, PPV, NPV) for all meaningful subgroups. Use fairness metrics appropriate to the use case (equalized odds, calibration by group) and present trade-offs transparently.

Quick action: Add subgroup heatmaps to model cards and require an explicit mitigation plan for any subgroup with substantially worse performance.
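
A minimal sketch of disaggregated reporting: the function below computes sensitivity, specificity, PPV, and NPV per subgroup from binary predictions. The row format (`pred`, `label`, and a grouping key such as `region`) and the toy data are assumptions made for illustration.

```python
from collections import defaultdict


def safe_div(numerator, denominator):
    """Return None instead of failing when a subgroup has no such cases."""
    return numerator / denominator if denominator else None


def subgroup_metrics(rows, group_key):
    """Compute per-subgroup metrics from rows with 'pred' and 'label' in {0, 1}."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "fn": 0, "tn": 0})
    for r in rows:
        c = counts[r[group_key]]
        if r["pred"] == 1 and r["label"] == 1:
            c["tp"] += 1
        elif r["pred"] == 1 and r["label"] == 0:
            c["fp"] += 1
        elif r["pred"] == 0 and r["label"] == 1:
            c["fn"] += 1
        else:
            c["tn"] += 1
    report = {}
    for group, c in counts.items():
        report[group] = {
            "n": sum(c.values()),
            "sensitivity": safe_div(c["tp"], c["tp"] + c["fn"]),
            "specificity": safe_div(c["tn"], c["tn"] + c["fp"]),
            "ppv": safe_div(c["tp"], c["tp"] + c["fp"]),
            "npv": safe_div(c["tn"], c["tn"] + c["fn"]),
        }
    return report


# Toy rows: the model is perfect on urban cases and weak on rural ones.
rows = [
    {"region": "urban", "pred": 1, "label": 1},
    {"region": "urban", "pred": 1, "label": 1},
    {"region": "urban", "pred": 0, "label": 0},
    {"region": "urban", "pred": 0, "label": 0},
    {"region": "rural", "pred": 0, "label": 1},
    {"region": "rural", "pred": 1, "label": 1},
    {"region": "rural", "pred": 0, "label": 0},
    {"region": "rural", "pred": 1, "label": 0},
]

for group, metrics in subgroup_metrics(rows, "region").items():
    print(group, metrics)
```

The aggregate sensitivity across all eight rows is 0.75, which hides the fact that rural sensitivity is only 0.5. Reporting `n` next to each metric also shows when a subgroup is too small for its estimate to be trusted.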

10. Community-driven datasets and evaluation benchmarks

Support and use African-led efforts to create datasets and benchmarks (e.g., Masakhane for African languages); these initiatives improve representation and create shared, reproducible evaluation standards (Masakhane, n.d.).

Quick action: Contribute anonymized, well-documented data back to community repositories under appropriate governance.

Validation Roadmap (Step-by-step)

1. Define the intended use and deployment context (who, where, on which devices).
2. Assemble a data inventory with a datasheet for each source (Gebru et al., 2018).
3. Create a validation plan specifying primary and secondary endpoints, subgroup analyses, external validation sites, and prospective pilot design.
4. Establish an annotation protocol and measure inter-annotator agreement.
5. Train and tune with domain-aware methods (transfer learning plus local fine-tuning).
6. Perform internal validation using stratified and cluster-aware methods.
7. Run external, blinded validation on at least one independent site.
8. Report a model card including subgroup metrics and calibration (Mitchell et al., 2019).
9. Pilot deployment with monitoring (track performance drift, log cases routed to humans).
10. Continuous re-validation and governance: reassess performance after major data shifts, software updates, or geographic expansion.
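
For the monitoring steps at the end of the roadmap, one common proxy when ground-truth labels arrive late is to compare the distribution of an input feature or model score in production against the validation-time reference. The sketch below uses the population stability index (PSI), a technique chosen here for illustration and not prescribed by the roadmap; the bin count, the synthetic data, and any alert threshold a team applies are assumptions.

```python
import math


def psi(reference, current, n_bins=5, eps=1e-6):
    """Population stability index between a reference and a current sample.

    Bins are equal-width over the reference range; values outside that
    range are clipped into the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins or 1.0  # avoid zero width for constant data

    def fractions(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            counts[max(0, min(idx, n_bins - 1))] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    ref, cur = fractions(reference), fractions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Synthetic model scores: validation-time reference vs. a shifted live sample.
reference_scores = [i / 100 for i in range(100)]
live_scores = [min(0.99, s + 0.3) for s in reference_scores]

print("PSI, same distribution:", psi(reference_scores, reference_scores))
print("PSI, shifted distribution:", round(psi(reference_scores, live_scores), 3))
```

A rising PSI does not prove that accuracy has dropped, only that the data no longer look like the validation data; it is a trigger for the re-validation described in the final step, not a substitute for it.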

Research Methods & Metrics to Use

- Power/sample size: estimate sample sizes needed to detect performance differences in small subgroups; where impossible, report uncertainty openly.
- Bootstrap and cross-site bootstrap: estimate confidence intervals for metrics and subgroup differences.
- Calibration plots / Brier score: assess probabilistic outputs.
- Decision curve analysis / net benefit: for clinical models, evaluate clinical usefulness, not only discrimination.
- Fairness audits: test multiple fairness definitions and report trade-offs.
- Prospective clinical endpoints: where applicable, measure clinical outcomes or process metrics (e.g., time to diagnosis, unnecessary referrals avoided).
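
As an illustration of the cross-site bootstrap listed above, the sketch below resamples whole sites with replacement and takes percentile bounds on sensitivity. It is a minimal percentile bootstrap under stated assumptions: the site names and counts are synthetic, and with only a handful of sites the resulting interval is necessarily coarse.

```python
import random


def sensitivity(rows):
    """Sensitivity from (pred, label) pairs; None if there are no positives."""
    tp = sum(1 for p, y in rows if p == 1 and y == 1)
    fn = sum(1 for p, y in rows if p == 0 and y == 1)
    return tp / (tp + fn) if (tp + fn) else None


def cluster_bootstrap_ci(rows_by_site, metric, n_boot=1000, alpha=0.05, seed=0):
    """Percentile confidence interval obtained by resampling entire sites."""
    rng = random.Random(seed)
    sites = list(rows_by_site)
    stats = []
    for _ in range(n_boot):
        sampled = [rng.choice(sites) for _ in sites]  # sites, with replacement
        rows = [row for s in sampled for row in rows_by_site[s]]
        value = metric(rows)
        if value is not None:
            stats.append(value)
    stats.sort()
    lower = stats[int((alpha / 2) * len(stats))]
    upper = stats[min(int((1 - alpha / 2) * len(stats)), len(stats) - 1)]
    return lower, upper


# Synthetic positives only: (pred, label) pairs per hypothetical site.
rows_by_site = {
    "site_A": [(1, 1)] * 8 + [(0, 1)] * 2,
    "site_B": [(1, 1)] * 6 + [(0, 1)] * 4,
    "site_C": [(1, 1)] * 9 + [(0, 1)] * 1,
}

all_rows = [row for rows in rows_by_site.values() for row in rows]
point = sensitivity(all_rows)
lower, upper = cluster_bootstrap_ci(rows_by_site, sensitivity)
print(f"sensitivity {point:.3f}, 95% CI ({lower:.3f}, {upper:.3f})")
```

Resampling sites rather than individual patients respects the clustering of patients within hospitals, so the interval reflects between-site variation instead of understating it.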

Governance, Ethics & Policy (WHO guidance & local adaptation)

Ethics and governance frameworks stress human rights, transparency, and accountability in health AI; adopt WHO recommendations and map them to local law and practice. Ensure data subject consent, clarify data-sharing agreements, and build local review boards or ethics committees familiar with AI validation. Integrate documentation (datasheets and model cards) into procurement and regulatory submissions so buyers can judge applicability (World Health Organization, 2021).

Examples & African Community Initiatives

Community efforts like Masakhane demonstrate continent-led dataset creation and evaluation for African languages. These projects offer a model for other domains (health, agriculture) by combining local expertise, open benchmarks, and shared evaluation protocols. Such coalitions increase reproducibility and make external validation across diverse contexts feasible (Masakhane, n.d.).

Limitations and Trade-offs

Many suggestions here require resources—time, expert annotators, partner sites—which can be scarce. Federated approaches reduce some barriers but add complexity. When resources limit ideal validation, be explicit: document limitations in datasheets and model cards; use conservative deployment policies (human oversight, narrow use cases) and seek staged scale-up tied to additional validation.

Conclusion

Validating AI models on African datasets must go beyond single-dataset, held-out splits. Robust validation combines careful documentation (datasheets), transparent model reporting (model cards), stratified and external validation, uncertainty quantification, and community-led dataset efforts. Following a structured roadmap and adopting WHO ethical guidance will reduce harms, improve fairness, and increase the likelihood that AI delivers real, sustainable benefits across Africa.

References

Gebru, T., Morgenstern, J., Vecchione, B., Wortman Vaughan, J., Wallach, H., Daumé III, H., & Crawford, K. (2018). Datasheets for datasets. arXiv:1803.09010.

Mitchell, M., Wu, S., Zaldivar, A., Barnes, P., Vasserman, L., Hutchinson, B., Spitzer, E., Raji, I. D., & Gebru, T. (2019). Model cards for model reporting. arXiv:1810.03993.

World Health Organization. (2021). Ethics and governance of artificial intelligence for health. WHO.

Norori, N., Hu, Q., Aellen, F. M., Faraci, F. D., & Tzovara, A. (2021). Addressing bias in big data and AI for health care: A call for open science. Patterns, 2(10), 100347. (Discusses bias sources and mitigation strategies relevant to health AI.)

Masakhane. (n.d.). Masakhane: Machine translation and NLP for African languages. Community initiative and publications. masakhane.io
