Liability and Clinical Governance for AI Recommendations in Africa: Who’s Accountable and How to Build Trustworthy Oversight

AI recommendations in clinical care raise novel liability and governance questions. This APA-style article for an African audience explains legal liability models, the roles and responsibilities of clinicians, vendors, and health facilities, and practical governance measures (procurement, clinical governance boards, model documentation, monitoring, incident management, insurance). It synthesises global guidance and African regulatory context and gives a 12-step implementation checklist hospitals, regulators, and vendors can use to deploy AI recommendations safely and accountably.

Abstract

The clinical deployment of AI systems that produce diagnostic or therapeutic recommendations raises difficult questions about legal liability, clinical responsibility, and governance, especially in African health systems with diverse regulatory environments. This article synthesizes legal concepts (negligence, product liability, vicarious liability, regulatory compliance), international guidance (WHO), and the continent’s evolving regulatory landscape to propose pragmatic clinical-governance structures that allocate responsibility, protect patients, and enable innovation. Core recommendations include: explicit procurement contracts that codify roles and indemnities; mandatory model documentation and local validation; clinical-governance committees with AI stewardship mandates; incident reporting and post-market surveillance; and risk-based insurance and regulatory oversight aligned with national laws. The approach balances clinician autonomy with vendor accountability and offers a 12-step operational checklist for hospitals, ministries, and vendors. (World Health Organization; PMC)

Introduction

AI recommendations (e.g., risk scores, treatment suggestions, image interpretation prompts) can improve diagnostic speed and consistency, but they also create legal grey areas: who is liable if an AI suggestion leads to patient harm? Does liability rest with the clinician, the hospital, or the AI developer? These questions have operational consequences: unclear accountability deters adoption, slows procurement, and can leave patients unprotected. International bodies (including the World Health Organization) have recommended governance, transparency, and monitoring for health AI; African policymakers and health systems must adapt those principles to local legal frameworks, capacity constraints, and health-system priorities. (World Health Organization)

Core liability concepts relevant to AI recommendations

Understanding liability requires mapping the legal doctrines likely to apply in clinical AI incidents:

- Clinical negligence (malpractice): traditionally focuses on the clinician’s duty to exercise reasonable care. If a clinician uncritically follows an incorrect AI recommendation, negligence claims may hinge on whether following the AI was reasonable in the circumstances (e.g., did the clinician perform appropriate checks, and was the AI validated locally?). Conversely, if a clinician rejects a correct AI recommendation and harm occurs, liability may also attach. Courts will assess standard of care, local practice, and foreseeability. (PMC)

- Product liability / defective design: where AI is embedded in regulated medical software or devices, developers or manufacturers may face claims under product-safety principles if a defect in the algorithm causes harm (failure to warn, defective design, inadequate training/validation). Some jurisdictions are exploring whether existing product liability rules suffice or require adaptation for software that learns after deployment. (PMC)

- Vicarious liability / institutional responsibility: health facilities and employers may be vicariously liable for harms caused by staff acting in the course of employment, including following or deploying AI recommendations. Procurement decisions, lack of local validation, or absence of governance structures can increase institutional exposure. (PMC)

- Contractual liability & indemnities: contracts between health facilities and vendors typically allocate financial responsibility (indemnities, limits on liability). Well-drafted procurement contracts can specify roles (who updates models, who monitors performance), warranties (level of performance, compliance), and limits on damages, though enforceability varies by jurisdiction and public-sector procurement rules. (PMC)

- Regulatory non-compliance: violations of data-protection laws, medical-device regulations, or national AI policies can create administrative penalties or civil liability. National laws (e.g., data protection acts, medical device statutes) and regional strategies shape expectations for developers and health systems. (DLA Piper Data Protection)

For summaries of these legal concepts in health-AI contexts, see reviews in legal and clinical journals (PMC).

Why Africa needs pragmatic governance that allocates liability clearly

Several features of African health systems heighten the need for explicit governance:

-

Fragmented regulatory frameworks and variable enforcement across countries make compliance complex; continental efforts (AU strategies) and WHO guidance provide harmonizing principles but do not replace national law. Clear local governance reduces legal uncertainty for hospitals and vendors. White & Case+1

-

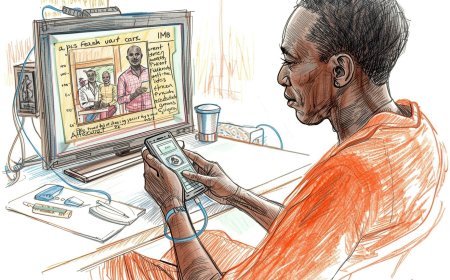

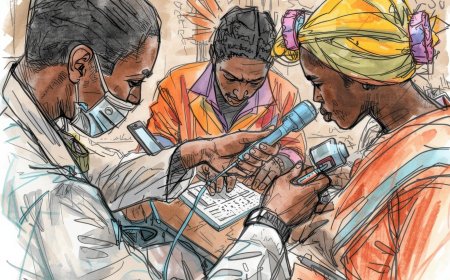

Resource constraints and task-shifting (wide use of clinical officers, nurses) shift how decisions are made; governance must accommodate different clinician skill mixes and define who may rely on AI recommendations. PMC

-

Limited precedent—few court decisions directly addressing AI in medicine—means that predictable contractual and governance practices are the best immediate risk-management tools. UM Law Scholarship Repo

Practical clinical-governance model: roles, processes, and tools

Below is an operational model hospitals and regulators in Africa can adopt. It balances clinician authority, vendor obligations, and system-level oversight.

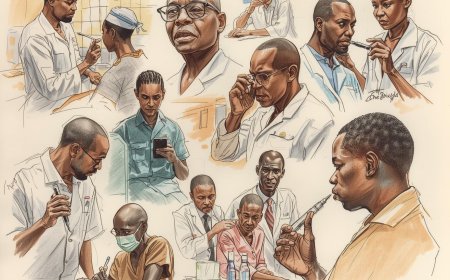

A. Roles & responsibilities (who does what)

- Clinicians (treating professionals): retain final decision-making authority; must document clinical reasoning, especially when deviating from AI recommendations; participate in local validation and governance activities.

- Health facility / employer: implements procurement due diligence, local validation, training, monitoring, and incident reporting; holds primary institutional responsibility for safe deployment and local SOPs.

- AI vendors / developers: provide model documentation (datasheets, model cards), validation evidence (source data, performance by subgroup), maintenance/patching plans, clear update procedures, and technical support; accept contractual obligations for defects or negligence where appropriate.

- Regulators / ministries / professional bodies: set minimum data and safety standards, require registries or approvals for high-risk AI tools, and establish reporting requirements for adverse events.

- Insurers / payers: align reimbursement and liability expectations (e.g., require proof of validation, indemnity coverage). (World Health Organization)

B. Key governance processes

- Pre-procurement due diligence: vendors must supply datasheets/model cards, independent validation reports (including local or comparable-setting data), cybersecurity and privacy compliance evidence, and a service-level agreement (SLA) that defines responsibilities for updates and incident handling. Procurement teams should include clinicians, IT, legal, and finance. (World Health Organization)

- Local validation & acceptance testing: before clinical use, run retrospective and (where possible) prospective validation on local data and in local workflows; document sensitivity/specificity, calibration, subgroup performance, and expected alert volumes. Hospitals should keep evidence of clinical acceptance and training completion. (PMC)

- Clinical governance / AI stewardship committee: a multidisciplinary committee (clinicians, hospital management, informatics, legal) approves deployments, sets thresholds for alert interruptiveness, reviews overrides and adverse events monthly, and authorises model updates. (PMC)

- Operational SOPs and clinician training: define when clinicians may rely on AI, required documentation when following or overriding AI advice, escalation pathways, and typical workflows for triage/second opinion. Training records reduce legal exposure and improve safe use. (World Health Organization)

- Incident reporting and post-market surveillance: integrate AI-specific adverse-event reporting into the hospital’s incident system; log model versions, inputs, and outputs for audited cases. Regulators should consider national registries of high-risk clinical AI tools. (World Health Organization)

- Contractual allocation of risk: procurement contracts should include warranties (accuracy thresholds), update & rollback clauses, indemnities, liability caps consistent with national procurement law, data-breach obligations, and insurance requirements (cyber and product liability). Legal teams must ensure clauses are enforceable locally. (PMC)

- Insurance & financial risk transfer: facilities should require vendors to carry professional indemnity and product liability insurance at appropriate levels; facilities must also review their own insurance coverage for vicarious liability. Where local insurance markets are thin, pooled or reinsurance arrangements may be needed. (PMC)

- Transparency & patient communication: patients should be informed when AI is used in care (consent where required), and mechanisms must exist to explain decisions and to enable complaints or redress. Documentation supports both ethical practice and legal defensibility. (World Health Organization)
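The local validation and acceptance testing process above asks facilities to document sensitivity, specificity, and subgroup performance. The following is a minimal Python sketch, not a validated tool, of how those figures might be tabulated from a retrospective case list. The field names (`subgroup`, `ai_flag`, `truth`), the subgroup labels, and the toy cases are assumptions made for illustration; calibration and alert-volume analysis are not covered.

```python
from collections import defaultdict


def confusion_counts(pairs):
    """Count TP/FP/TN/FN from (ai_flag, truth) boolean pairs."""
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for ai_flag, truth in pairs:
        if ai_flag and truth:
            counts["tp"] += 1
        elif ai_flag and not truth:
            counts["fp"] += 1
        elif not ai_flag and truth:
            counts["fn"] += 1
        else:
            counts["tn"] += 1
    return counts


def sensitivity_specificity(counts):
    """Return (sensitivity, specificity); None where the denominator is zero."""
    positives = counts["tp"] + counts["fn"]
    negatives = counts["tn"] + counts["fp"]
    sensitivity = counts["tp"] / positives if positives else None
    specificity = counts["tn"] / negatives if negatives else None
    return sensitivity, specificity


def validate_by_subgroup(cases):
    """Tabulate metrics overall and per subgroup.

    Each case is a dict with hypothetical keys: 'subgroup', 'ai_flag', 'truth'.
    Assumes no subgroup is itself named 'overall'.
    """
    grouped = defaultdict(list)
    for case in cases:
        pair = (case["ai_flag"], case["truth"])
        grouped["overall"].append(pair)
        grouped[case["subgroup"]].append(pair)
    return {
        name: sensitivity_specificity(confusion_counts(pairs))
        for name, pairs in grouped.items()
    }


# Toy retrospective sample (illustrative only, not real patient data).
cases = [
    {"subgroup": "adult", "ai_flag": True, "truth": True},
    {"subgroup": "adult", "ai_flag": False, "truth": False},
    {"subgroup": "adult", "ai_flag": True, "truth": False},
    {"subgroup": "adult", "ai_flag": False, "truth": True},
    {"subgroup": "paediatric", "ai_flag": True, "truth": True},
    {"subgroup": "paediatric", "ai_flag": False, "truth": False},
]
report = validate_by_subgroup(cases)
for name, (sens, spec) in report.items():
    print(name, sens, spec)
```

A table like this, kept with the acceptance-testing record, gives the stewardship committee a concrete baseline to compare against any performance warranty in the contract. Real validation would need far larger samples and confidence intervals before any conclusion is drawn.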

Liability allocation patterns & sample contract clauses (practical drafting guidance)

Below are high-level approaches and sample language ideas (for legal teams to refine to local law):

- Shared responsibility with limits (common model): vendor warrants model performance on defined datasets and will indemnify facility for damages arising from software defects; facility retains responsibility for clinical application and for clinician training.

Sample clause idea: “Vendor warrants that the Model achieves [X] sensitivity/specificity on validation dataset described in Appendix A. Vendor shall indemnify and hold harmless the Facility for direct damages arising from defective model outputs that directly result from Vendor’s failure to exercise reasonable care in development, maintenance, or distribution, subject to the monetary cap set in Section 9.” (PMC)

- Risk-based carve-outs: higher-risk functions (e.g., autonomous dosing recommendations) attract stricter warranties, regulatory pre-approval, and higher vendor liability caps. Lower-risk decision support (information provision) carries lighter contractual burdens. This aligns contractual risk with clinical risk. (Nature)

- Obligation to support audits & logs: vendor provides auditable logs for at least X years and cooperates with incident investigations.

Sample clause: “Vendor shall maintain immutable logs of model inputs, outputs, and model version identifiers, and shall produce these logs within 30 days of a reasonable request by the Facility or regulator for investigation of a reported adverse event.” (World Health Organization)

Legal counsel must test enforceability in procurement regimes and adapt for public sector constraints.
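The audit-log clause above refers to logs of model inputs, outputs, and model version identifiers that cannot be silently altered. One common way to approximate this in software is a hash-chained, append-only log, in which each entry stores the hash of the previous one. The Python sketch below is an illustration under stated assumptions, not a prescribed design: the model name `sepsis-risk-1.2.0`, the input fields, and the timestamps are hypothetical, and a production system would also need pseudonymised inputs, access control, and write-once storage.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(body):
    """Hash a canonical JSON encoding so key order cannot change the digest."""
    canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def append_entry(log, model_version, inputs, output, timestamp):
    """Append one recommendation record, chained to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    body = {
        "timestamp": timestamp,
        "model_version": model_version,
        "inputs": inputs,
        "output": output,
        "prev_hash": prev_hash,
    }
    entry = dict(body, hash=_entry_hash(body))
    log.append(entry)
    return entry


def verify_chain(log):
    """Return True only if every entry is unmodified and correctly linked."""
    prev_hash = GENESIS_HASH
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev_hash"] != prev_hash or _entry_hash(body) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical records for illustration only.
log = []
append_entry(log, "sepsis-risk-1.2.0", {"heart_rate": 118, "lactate": 3.1},
             {"risk": "high"}, "2024-05-01T08:30:00Z")
append_entry(log, "sepsis-risk-1.2.0", {"heart_rate": 76, "lactate": 1.0},
             {"risk": "low"}, "2024-05-01T09:10:00Z")

intact_before = verify_chain(log)
log[0]["output"] = {"risk": "low"}  # simulate after-the-fact tampering
intact_after = verify_chain(log)
print(intact_before, intact_after)
```

A chain like this makes tampering detectable rather than impossible, so the contract should still state who holds the logs and where an independent copy of the latest hash is kept.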

Regulatory and policy landscape in Africa — what to expect

- National laws vary. Some countries already have data-protection statutes (e.g., Kenya’s Data Protection Act, 2019) and others have medical device or software regulations; these laws shape obligations for consent, data transfer, and breach notification. Health ministries and regulators are beginning to adapt medical-device frameworks to include software-as-medical-device (SaMD) and AI. (DLA Piper Data Protection)

- Regional harmonisation is emerging. The African Union and regional bodies are developing continental strategies and data-governance frameworks to harmonise AI governance; use these as reference points but verify national transposition and timelines. (White & Case)

- WHO guidance is authoritative for health AI. WHO publications recommend transparency, safety monitoring, local validation, and governance mechanisms that many African regulators are adopting as good practice. Facilities should align procurement and governance with WHO recommendations. (World Health Organization)

Because the legal landscape is evolving rapidly, facilities and vendors should monitor national regulator updates and engage in policy consultations.

Case scenarios: who is likely liable?

- Clinician follows AI suggestion without checking and harm occurs: potential clinical negligence claim; the defence is stronger if the AI was well validated locally and governance and training were in place. Vendor exposure is possible if the AI was defective and contract warranties/indemnities permit claims. (PMC)

- AI bug produces systematically wrong recommendations due to a developer error: vendor product-liability risk, plus reputational and contractual exposure; the facility may also be liable if it failed to monitor or refused to implement an available vendor patch. (Nature)

- Inadequate consent or data breach linked to AI use: regulatory penalties and civil liability under data-protection laws; both vendor and facility can be implicated depending on data controller/processor roles. (DLA Piper Data Protection)

Practical 12-Step Checklist (Operational)

For hospitals / health systems deploying AI recommendations:

- Require vendor datasheets & model cards as a precondition to procurement. (World Health Organization)

- Conduct local retrospective validation; if feasible, run a prospective pilot. (PMC)

- Establish an AI clinical-governance/stewardship committee. (PMC)

- Define SOPs for clinician reliance, documentation, and overrides. (World Health Organization)

- Insist on auditable logs and versioning in the contract. (World Health Organization)

- Negotiate clear indemnity, warranty, and insurance clauses. (PMC)

- Require vendor incident response and patching SLAs. (PMC)

- Train clinicians and keep records of training. (World Health Organization)

- Integrate AI incidents into existing clinical incident reporting. (World Health Organization)

- Monitor post-market performance and subgroup outcomes. (PMC)

- Communicate use of AI to patients; obtain consents where required. (World Health Organization)

- Engage with national regulators and professional bodies on evolving standards. (White & Case)
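The checklist step on monitoring post-market performance can be made routine with a simple periodic check. The sketch below, in Python, groups confirmed-positive cases by month and flags months in which observed sensitivity falls below a warranted level. It is illustrative only: the field names (`month`, `ai_flag`, `truth`), the 0.8 threshold, the minimum case count, and the toy data are assumptions, not figures taken from any contract or guideline.

```python
from collections import defaultdict


def flag_months(cases, warranted_sensitivity, min_positive_cases=3):
    """Return (month, sensitivity) pairs where sensitivity is below the warranty.

    Months with fewer than `min_positive_cases` confirmed positives are skipped,
    because a rate based on one or two cases is too unstable to act on.
    """
    detected = defaultdict(int)  # confirmed positives the AI flagged
    missed = defaultdict(int)    # confirmed positives the AI did not flag
    for case in cases:
        if not case["truth"]:
            continue  # sensitivity only concerns confirmed-positive cases
        if case["ai_flag"]:
            detected[case["month"]] += 1
        else:
            missed[case["month"]] += 1

    flagged = []
    for month in sorted(set(detected) | set(missed)):
        positives = detected[month] + missed[month]
        if positives < min_positive_cases:
            continue
        sensitivity = detected[month] / positives
        if sensitivity < warranted_sensitivity:
            flagged.append((month, round(sensitivity, 2)))
    return flagged


def make_cases(month, n_detected, n_missed):
    """Build toy confirmed-positive cases for one month (illustrative only)."""
    hit = {"month": month, "ai_flag": True, "truth": True}
    miss = {"month": month, "ai_flag": False, "truth": True}
    return [hit] * n_detected + [miss] * n_missed


cases = (
    make_cases("2024-01", 4, 0)    # sensitivity 1.0
    + make_cases("2024-02", 2, 2)  # sensitivity 0.5, below threshold
    + make_cases("2024-03", 0, 1)  # too few cases to assess
)
flagged = flag_months(cases, warranted_sensitivity=0.8)
print(flagged)
```

Flagged months would go to the stewardship committee for review rather than trigger automatic action, since a dip may reflect case mix or data quality rather than a model defect.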

Limitations, trade-offs & policy recommendations

- Trade-offs: Strong vendor liability can discourage small innovators; weak liability leaves patients exposed. Risk-based regulation (higher scrutiny for higher clinical risk) and graduated contractual obligations help balance innovation and safety. (Nature)

- Capacity & enforcement: Many countries will need capacity building (regulators, courts, insurers) to implement and adjudicate AI-related claims; regional cooperation and WHO support can accelerate this. (World Health Organization)

- Insurance market limitations: Where insurance markets are shallow, governments and donors may need to facilitate pooled risk mechanisms or minimum insurance requirements for vendors operating in public procurement. (PMC)

Policy recommendations for governments and regulators:

- Adopt risk-based classification for clinical AI and require registration/approval for high-risk tools. (World Health Organization)

- Mandate model documentation (datasheets/model cards) and post-market surveillance reporting for clinical AI. (World Health Organization)

- Clarify data controller/processor roles in national data-protection laws to assign liability for breaches. (DLA Piper Data Protection)

- Support standard contract templates and procurement guidance for public facilities to allocate risk sensibly. (PMC)

Conclusion

AI recommendations offer promise for African health systems but also create new liability and governance challenges. Given current legal uncertainty and fragmented regulatory landscapes, the fastest path to safe, accountable adoption is to codify roles and responsibilities through (a) robust procurement contracts, (b) local validation and training, (c) clinical-governance/stewardship structures, (d) incident reporting and post-market surveillance, and (e) appropriate insurance arrangements. Aligning these operational measures with WHO guidance and regional strategies will protect patients, give clinicians confidence, and allow vendors to scale responsibly.

References

World Health Organization. (2021). Ethics and governance of artificial intelligence for health. WHO.

World Health Organization. (2024, January 18). WHO releases AI ethics and governance guidance for large multi-modal models. WHO news release.

Bottomley, D. (2023). Liability for harm caused by AI in healthcare: an overview of the core legal concepts. Journal / Legal Review. (Available: PMC).

Townsend, B. A., et al. (2023). Mapping the regulatory landscape of AI in healthcare in Africa. Scoping Review / Journal. (Available: PMC).

Eldakak, A. (2024). Civil liability for the actions of autonomous AI in healthcare. Article / Analysis. (Available: Nature).
