Clinical Validation of Biosensors: Protocols That Investors Expect

Practical, Africa-focused playbook for clinical validation of wearable biosensors that convinces investors and regulators. Covers the evidence ladder (analytical → bench → prospective → real-world), primary/secondary endpoints, comparator/gold-standard choices, sample-size worked examples, statistical methods (Bland–Altman, sensitivity/specificity, AUROC), usability and equity testing, regulatory cues for Kenya/South Africa, and a copy-ready validation protocol outline investors expect. APA citations and live links included.

Why this matters (one-paragraph teaser)

Investors don’t fund pretty prototypes; they fund predictable outcomes. For biosensor startups that means a clear, staged clinical validation plan showing accuracy against appropriate gold standards, reproducibility, safety, and real-world utility. This article tells you exactly what investors will read first in your data room, and exactly how to structure studies so the results survive investor grilling, regulator scrutiny, and the messy reality of African clinics and community settings (U.S. Food and Drug Administration, 2024).

Quick, human anecdote (to keep you awake)

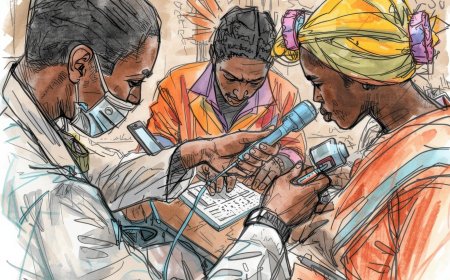

A founder once promised her LPs an “ECG-grade” wearable after a lab demo. Investors politely asked for the prospective data. The lab demo looked great, until field nurses showed how sweaty skin and a loose strap turned “ECG-grade” into “please-call-an-ambulance-grade.” The moral: build real-world robustness and show it in the protocol, not just in a PowerPoint demo.

High-level investor checklist — what backers actually want to see (TL;DR)

- Well-staged validation strategy: analytical → bench → retrospective/controlled clinical → prospective real-world.
- Clear primary endpoint(s) tied to clinical use (e.g., patient-level AF detection sensitivity; mean absolute error (MAE) for heart rate ≤5 bpm).
- Appropriate comparator (gold standard): 12-lead ECG for rhythm; clinical-grade ABPM for BP; lab pulse oximeter for SpO₂.
- Statistical plan: sample-size calculations and pre-specified analysis (Bland–Altman, sensitivity/specificity, AUROC, ICC). Show step-by-step math for sample sizes.
- Usability & equity testing: device performance across skin tones, motion, sweat, and typical African climate/use patterns.
- Regulatory route & QMS: ISO 13485 (International Organization for Standardization, 2016), device classification, and local regulator engagement (e.g., PPB/Kenya, SAHPRA/South Africa).
- Data governance & cybersecurity evidence.
- A realistic timeline & budget that links milestones to derisking events.

The rest of this article gives you the “how” and the copy-paste protocol structure.

1) The evidence ladder (stage your studies — investors like stairs, not leaps)

-

Analytical validation (bench) — LOD, linearity, drift, repeatability, calibration stability. (In-lab; 2–8 weeks).

-

Technical/engineering verification — hardware/software stress tests, EMI/EMC and safety (IEC 60601 family), battery & thermal testing. (Engineering labs / third-party test houses). Simplexity Product Development+1

-

Controlled clinical accuracy (retrospective or case-control) — samples/pairs collected under ideal conditions vs gold standard (e.g., hospital ECG for arrhythmia). Fast, cheaper, used to estimate sensitivity/specificity. Follow STARD reporting guidance. equator-network.org

-

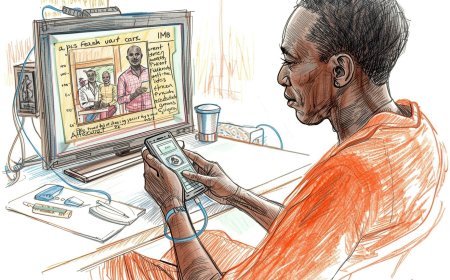

Prospective clinical validation (intended use population) — consecutive enrolment, pre-specified endpoints, real-world conditions (motion, heat, low battery, different skin tones). This is the backer-winning study. PMC

-

Real-world performance & utility (deployment study) — impact on decisions, clinical pathways, health economics (adoption, reduced readmissions, adherence). Investors want evidence this thing changes outcomes or reduces cost. Excedr

2) Primary & secondary endpoints — pick them like you mean business

Examples (pick what matches your device use case):

Primary endpoints (examples investors expect):

- Arrhythmia detection: patient-level sensitivity & specificity for atrial fibrillation (AF) vs 12-lead ECG interpreted by a cardiologist.
- Heart rate monitoring: mean absolute error (MAE) in beats/min vs clinical ECG across resting, walking, and post-exercise states. Target: MAE ≤5 bpm or a pre-specified clinically meaningful bound.
- Cuffless blood pressure: mean error and limits of agreement vs a validated sphygmomanometer (ISO 81060 family guidance where applicable) (Wang et al., 2022).

Secondary endpoints:

- Precision / repeatability (intra-class correlation, coefficient of variation).
- Time-to-first-valid-reading (practicality).
- Failure rate (percent unusable readings).
- Performance across subgroups: skin tone, BMI, age, motion levels, temperature/humidity buckets.
- Battery life, connectivity success rate, data loss incidence.
- Usability metrics (SUS score, task completion rate among nurses/CHWs).
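For a continuous endpoint such as heart rate, the agreement statistics this article names (MAE, RMSE, and Bland–Altman bias with 95% limits of agreement) can be computed from paired readings. The following is a minimal sketch, not a validated analysis pipeline: the function name `agreement_metrics` and the sample readings are hypothetical, and it assumes each device reading has already been paired with a simultaneous reference reading.

```python
import math
import statistics

def agreement_metrics(device, reference):
    """Paired agreement statistics for a continuous measure (e.g. heart rate in bpm)."""
    if len(device) != len(reference) or len(device) < 2:
        raise ValueError("need at least two paired readings of equal length")
    diffs = [d - r for d, r in zip(device, reference)]  # device minus reference
    mae = sum(abs(x) for x in diffs) / len(diffs)
    rmse = math.sqrt(sum(x * x for x in diffs) / len(diffs))
    bias = statistics.mean(diffs)          # Bland-Altman mean bias
    sd = statistics.stdev(diffs)           # sample SD of the differences
    return {
        "mae": mae,
        "rmse": rmse,
        "bias": bias,
        "loa_lower": bias - 1.96 * sd,     # 95% limits of agreement
        "loa_upper": bias + 1.96 * sd,
    }

# Hypothetical paired readings (bpm), for illustration only.
device_bpm = [72, 75, 90, 110, 64, 131]
ecg_bpm = [70, 76, 88, 114, 65, 128]

m = agreement_metrics(device_bpm, ecg_bpm)
meets_target = m["mae"] <= 5.0  # the MAE <= 5 bpm target used as an example above
print({k: round(v, 2) for k, v in m.items()}, meets_target)
```

In a real study the same function would be run per activity state (resting, walking, post-exercise) and per subgroup, since a pooled MAE can hide poor performance under motion.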

3) Comparator (gold standard) guidance — pick the right yardstick

- Rhythm/arrhythmia: 12-lead ECG interpreted by at least two cardiologists (adjudication if they disagree). Continuous Holter ± cardiologist adjudication for longer monitoring.
- Heart rate: clinical-grade ECG (simultaneous, time-synchronised). Use beat-to-beat comparison if possible.
- SpO₂: clinical-grade Masimo or hospital pulse oximeter with documented accuracy and calibration. Note: pulse oximeter bias across skin tones is a known issue, so test for it explicitly (Canali et al., 2022).
- Blood pressure (cuffless): validated automated upper-arm sphygmomanometer per ISO 81060 or AAMI protocols; ambulatory BP monitoring (ABPM) for ambulatory endpoints (Wang et al., 2022).

Always synchronise clocks, log timestamps, and predefine rules to match device epochs.
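A pre-defined matching rule can be as simple as "pair each device reading with the nearest reference reading, provided the two are within a fixed time window after correcting for the logged clock offset". The sketch below illustrates that idea only; the function name `match_epochs`, the 1-second window, and the sample data are assumptions for illustration, not values from any standard.

```python
from bisect import bisect_left

def match_epochs(device, reference, max_gap_s=1.0, clock_offset_s=0.0):
    """Pair each device reading with the nearest reference reading in time.

    device, reference: lists of (timestamp_seconds, value); reference must be
    sorted by timestamp. clock_offset_s is the logged device-minus-reference
    clock offset and is subtracted from every device timestamp.
    Device readings with no reference sample within max_gap_s stay unmatched.
    """
    ref_times = [t for t, _ in reference]
    pairs, unmatched = [], []
    for t_dev, v_dev in device:
        t = t_dev - clock_offset_s
        i = bisect_left(ref_times, t)
        # Nearest neighbour is either just before or just after position i.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(reference)]
        if not candidates:
            unmatched.append((t_dev, v_dev))
            continue
        j = min(candidates, key=lambda k: abs(ref_times[k] - t))
        if abs(ref_times[j] - t) <= max_gap_s:
            pairs.append((t, v_dev, reference[j][1]))
        else:
            unmatched.append((t_dev, v_dev))
    return pairs, unmatched

# Hypothetical data: device clock runs 0.5 s ahead of the reference ECG.
reference = [(0.0, 70), (5.0, 72), (10.0, 75)]
device = [(0.7, 71), (5.4, 73), (8.2, 80)]
pairs, unmatched = match_epochs(device, reference, max_gap_s=1.0, clock_offset_s=0.5)
print(pairs, unmatched)
```

Note that this sketch allows one reference sample to be paired with more than one device reading; a protocol may prefer one-to-one matching, and should report the number of unmatched readings either way.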

4) Sample-size worked example — do the math investors expect (step-by-step)

Scenario: Your wearable detects atrial fibrillation (AF). Investors expect sensitivity ≥ 0.95 with a tight CI. You choose: desired sensitivity Se = 0.95, allowable half-width of 95% CI d = 0.03, Z = 1.96 (for 95% CI).

Compute the required number of positive cases (n_pos) using the common normal-approximation formula n_pos = Z² × Se × (1 − Se) ÷ d²:

- Z² = 1.96 × 1.96 = 3.8416.
- Se × (1 − Se) = 0.95 × (1 − 0.95) = 0.95 × 0.05 = 0.0475.
- Numerator = Z² × Se × (1 − Se) = 3.8416 × 0.0475 = 0.182476.
- d² = 0.03 × 0.03 = 0.0009.
- n_pos = Numerator ÷ d² = 0.182476 ÷ 0.0009 = 202.751... → round up → 203 positive cases required.

If AF prevalence in your recruitment pool is p = 5% (0.05), total N needed ≈ 203 ÷ 0.05 = 4,060 participants. That’s why many teams use enriched sampling or case-control cohorts for efficiency, then confirm prospectively in a pragmatic cohort. Show this math in your data room (U.S. Food and Drug Administration, 2024).
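The same arithmetic is easy to script so that reviewers can rerun it with different assumptions. This is a small sketch of the normal-approximation calculation above; the function names are illustrative, and the inputs (Se = 0.95, d = 0.03, prevalence = 0.05) are the scenario values from this section.

```python
import math

def n_positives_for_sensitivity(se, half_width, z=1.96):
    """Positive cases needed so the CI around sensitivity has the given half-width.

    Uses the normal approximation n = z^2 * Se * (1 - Se) / d^2, rounded up.
    """
    n = (z ** 2) * se * (1 - se) / (half_width ** 2)
    return math.ceil(n)

def total_enrolment(n_pos, prevalence):
    """Participants to enrol so that, on average, n_pos of them are true positives."""
    return math.ceil(n_pos / prevalence)

n_pos = n_positives_for_sensitivity(se=0.95, half_width=0.03)
total = total_enrolment(n_pos, prevalence=0.05)
print(n_pos, total)
```

Changing `half_width` shows quickly how expensive precision is: halving the CI half-width roughly quadruples the number of positive cases required.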

5) Statistical analysis plan — what to pre-specify

Pre-specify in the protocol and SAP:

- Primary analysis: patient-level sensitivity/specificity with exact (Clopper–Pearson) 95% CIs; pre-define what counts as a positive (e.g., a minimum episode-length threshold).
- Continuous measures (HR, BP): Bland–Altman plots with mean bias and 95% limits of agreement; report MAE, RMSE, and ICC.
- Time-series / event detection: episode-level sensitivity, positive predictive value (PPV), and false-alarm rate per patient-day.
- Handling of missing data: rules for excluding epochs, and the imputation approach, if any.
- Subgroup analyses: pre-planned by skin tone, motion level, age bracket, and clinical condition.
- Interim analysis / stopping rules: if a sequential design is used, state when the study stops early for futility or overwhelming success, and cite sequential diagnostic trial methods when using adaptive designs (Tang et al., 2009).
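For event detection, the three episode-level quantities in the list above reduce to simple ratios once detected episodes have been adjudicated. The sketch below assumes the counts are already available; the function name `event_metrics` and the example counts are hypothetical.

```python
def event_metrics(true_detections, missed_episodes, false_alarms, patient_days):
    """Episode-level sensitivity, PPV, and false-alarm rate per patient-day."""
    reference_episodes = true_detections + missed_episodes
    device_alerts = true_detections + false_alarms
    if reference_episodes == 0 or device_alerts == 0 or patient_days <= 0:
        raise ValueError("counts too small to compute event metrics")
    return {
        "sensitivity": true_detections / reference_episodes,
        "ppv": true_detections / device_alerts,
        "false_alarms_per_patient_day": false_alarms / patient_days,
    }

# Hypothetical adjudicated counts, for illustration only.
em = event_metrics(true_detections=45, missed_episodes=5,
                   false_alarms=15, patient_days=300)
print(em)
```

The false-alarm rate is worth reporting next to sensitivity because it is the number clinicians and CHWs actually feel in daily use.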

Investors expect you to attach the SAP and to be able to show blinded analysis workflows.
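An exact (Clopper–Pearson) interval, as named in the primary analysis, can be computed without third-party packages by inverting the binomial distribution numerically. The following is a sketch under that approach, using bisection and only the Python standard library; the helper names and the example counts (193 of 203 AF cases detected) are hypothetical. A statistics package that provides a beta quantile function would normally be used instead.

```python
import math

def _binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p), with each term computed in log space."""
    total = 0.0
    for i in range(k + 1):
        log_pmf = (math.lgamma(n + 1) - math.lgamma(i + 1) - math.lgamma(n - i + 1)
                   + i * math.log(p) + (n - i) * math.log1p(-p))
        total += math.exp(log_pmf)
    return total

def _solve_decreasing(f, target, iterations=60):
    """Bisection for f(p) = target, where f decreases as p goes from 0 to 1."""
    lo, hi = 0.0, 1.0
    for _ in range(iterations):
        mid = (lo + hi) / 2
        if f(mid) > target:
            lo = mid   # f is still too large, so the root lies to the right
        else:
            hi = mid
    return (lo + hi) / 2

def clopper_pearson(successes, n, alpha=0.05):
    """Exact two-sided (1 - alpha) confidence interval for a binomial proportion."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    # Lower bound solves P(X >= successes | p) = alpha / 2.
    lower = 0.0 if successes == 0 else _solve_decreasing(
        lambda p: _binom_cdf(successes - 1, n, p), 1 - alpha / 2)
    # Upper bound solves P(X <= successes | p) = alpha / 2.
    upper = 1.0 if successes == n else _solve_decreasing(
        lambda p: _binom_cdf(successes, n, p), alpha / 2)
    return lower, upper

# Hypothetical result: 193 of 203 adjudicated AF cases detected.
sens = 193 / 203
ci_low, ci_high = clopper_pearson(193, 203)
print(round(sens, 4), round(ci_low, 4), round(ci_high, 4))
```

The exact interval is typically a little wider than the normal-approximation interval used for planning, so a study sized exactly to the planning formula may report a slightly wider CI than the target.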

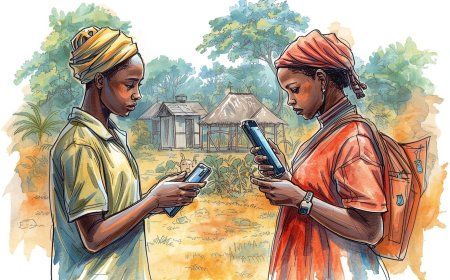

6) Usability & equity testing — don’t be clever, be fair

Investors (and regulators) now expect demonstration that the wearable works across the population you will serve:

- Skin tone testing: include Fitzpatrick or measured reflectance categories; report performance differences. Recent literature flags pulse-oximeter bias on darker skin, so treat this proactively (Canali et al., 2022).
- Motion & environment: test during daily living (walking, chores), in hot/humid conditions, with sweat and customary clothing (sleeves, bracelets).
- Operator studies: if CHWs or nurses will apply the device, test real-user training time, error rates, and task success rates, and provide a teach-back protocol.
- Adherence & wearability: wear time per day, skin irritation reports, battery/swapping friction.

Document all findings and mitigation (firmware filtering, improved strap design, algorithm retraining).
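Reporting performance differences across subgroups mostly means computing the same error statistics per group and showing them side by side with the group sizes. This is an illustrative sketch only: the record layout, the `subgroup_summary` name, the Fitzpatrick groupings, and the SpO₂ values are all made up for the example.

```python
from collections import defaultdict

def subgroup_summary(records, key):
    """Per-subgroup sample size, mean absolute error, and mean signed bias."""
    diffs = defaultdict(list)
    for r in records:
        diffs[r[key]].append(r["device"] - r["reference"])  # device minus reference
    return {
        group: {
            "n": len(d),
            "mae": sum(abs(x) for x in d) / len(d),
            "bias": sum(d) / len(d),
        }
        for group, d in diffs.items()
    }

# Hypothetical paired SpO2 readings (%), grouped by Fitzpatrick phototype.
records = [
    {"fitzpatrick": "I-II", "device": 97, "reference": 96},
    {"fitzpatrick": "I-II", "device": 95, "reference": 95},
    {"fitzpatrick": "III-IV", "device": 96, "reference": 95},
    {"fitzpatrick": "III-IV", "device": 94, "reference": 96},
    {"fitzpatrick": "V-VI", "device": 97, "reference": 94},
    {"fitzpatrick": "V-VI", "device": 95, "reference": 93},
]

summary = subgroup_summary(records, key="fitzpatrick")
for group, stats in summary.items():
    print(group, stats)
```

Signed bias is kept alongside MAE because a systematic over-read in one group is a different problem from random noise, and the mitigation (recalibration versus filtering) differs too.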

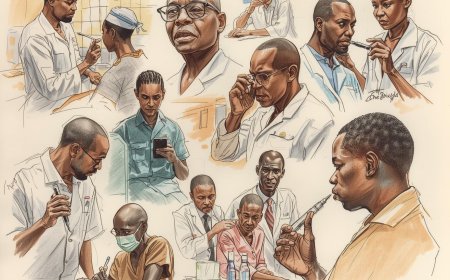

7) Regulatory & standards map — investors want a clear path

Key reference points to list in your data room / protocol:

- FDA DHT guidance for digital health technologies used to acquire clinical trial endpoints; useful even if you plan to launch in other jurisdictions (U.S. Food and Drug Administration, 2024).
- STARD for reporting diagnostic accuracy studies, and CONSORT-like transparency for diagnostic/endpoint studies (Cohen et al., 2016).
- ISO 13485 (QMS) and relevant IEC/ISO safety standards: IEC 60601 family for electrical safety; ISO 10993 for biocompatibility; IEC 62304 for software lifecycle; and device-specific standards such as ISO/AAMI for BP (International Organization for Standardization, 2016; Simplexity Product Development, 2023).
- Local regulators: engage early with PPB/Kenya, SAHPRA/South Africa (or the national regulator in your market); provide them your protocol and ask for a pre-submission review. Early regulatory engagement de-risks the programme, and investors treat it as gold (RVO.nl).

Put your regulatory engagement notes, meeting minutes and agreed deliverables in the data room.

8) Data management, security & privacy — the non-sexy things investors check first

- Time-synced, tamper-evident logs; secure TLS transport; role-based access audits.
- Data minimisation, encrypted backups, and an explicit data retention policy.
- If you plan to commercialise in Kenya or South Africa, align with local data protection laws and national digital health guidance. Document DPO contact and DPIA results (Transform Health Coalition, 2024).

Investors expect SOC-2 or ISO/IEC 27001 plans for growth-stage diligence.
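One common way to make a log tamper-evident is to chain entries by hash, so that editing any earlier entry invalidates every hash after it. The sketch below shows the idea with SHA-256 from the standard library; it is an assumption about how such a log could be built, not a description of any particular product, and the entry fields are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry

def _entry_hash(timestamp, payload, prev_hash):
    body = {"timestamp": timestamp, "payload": payload, "prev_hash": prev_hash}
    encoded = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()

def append_entry(log, timestamp, payload):
    """Append an entry whose hash covers its content and the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({
        "timestamp": timestamp,
        "payload": payload,
        "prev_hash": prev_hash,
        "hash": _entry_hash(timestamp, payload, prev_hash),
    })

def verify_chain(log):
    """Return True only if every entry's hash and back-link are intact."""
    prev_hash = GENESIS_HASH
    for entry in log:
        expected = _entry_hash(entry["timestamp"], entry["payload"], entry["prev_hash"])
        if entry["prev_hash"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True

# Hypothetical study events with UTC timestamps.
log = []
append_entry(log, "2025-01-10T08:00:00Z", {"event": "device_paired", "site": "A"})
append_entry(log, "2025-01-10T08:05:00Z", {"event": "reading", "hr": 72})
append_entry(log, "2025-01-10T08:06:00Z", {"event": "reading", "hr": 74})
intact_before = verify_chain(log)

log[1]["payload"]["hr"] = 60          # simulate an after-the-fact edit
intact_after = verify_chain(log)
print(intact_before, intact_after)
```

A chain like this only detects edits if the latest hash is also stored somewhere the editor cannot reach (for example, with the sponsor or monitor); otherwise the whole chain could be recomputed.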

9) A copy-ready protocol skeleton investors expect (concise)

- Title & summary: device name, version, objective (primary endpoint).
- Background & rationale: unmet need, intended use, benefits.
- Device description: hardware, firmware, algorithm version, calibration. Freeze the software version before the study starts.
- Study objectives: primary/secondary endpoints, non-inferiority/superiority margins if any.
- Study design: prospective, multi-site, observational/controlled, inclusion/exclusion criteria.
- Comparator: exact device/model and adjudication plan.
- Sample size & justification: include worked numbers (as above).
- Statistical analysis plan: methods, confidence intervals, subgroup plans, handling of missing data.
- Usability & equity assessments: instruments and thresholds.
- Safety monitoring & AE reporting: definitions and timelines.
- Regulatory & ethical approvals: list planned IRBs/ethics committees and regulator pre-submissions.
- Data management & monitoring: CRF/eCRF, data flow diagram, blinding.
- Quality control & SOPs: operator training, calibration, lot tracking.
- Timeline & budget: milestone-linked payments and go/no-go gates.

Give this protocol to your preferred clinical partner and your investors will breathe easier.

10) Practical timeline & rough budgets (rule-of-thumb)

- Analytical verification: 1–2 months, USD 10k–50k (lab costs, bench reagents).
- Retrospective controlled study: 2–4 months, USD 25k–100k (site/lab fees, sample access).
- Prospective validation (1–2 sites): 6–12 months, USD 150k–600k depending on size and country (staff, ECG/Holter rental, monitoring, data management).
- Real-world deployment study: 6–18 months, variable (scale dependent).

(These are order-of-magnitude figures. Prepare a line-item budget per site; investors will ask for burn rate per milestone.) (Excedr, 2025)
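To turn the ranges above into the per-milestone view investors ask for, a quick roll-up helps. This sketch uses the three stages that have cost ranges in the list (the deployment study is left out because its cost is described as variable) and assumes, purely for illustration, that the stages run back to back with no overlap.

```python
# (stage name, (min months, max months), (min USD, max USD)) from the list above.
STAGES = [
    ("Analytical verification", (1, 2), (10_000, 50_000)),
    ("Retrospective controlled study", (2, 4), (25_000, 100_000)),
    ("Prospective validation", (6, 12), (150_000, 600_000)),
]

def cumulative_plan(stages):
    """Running totals of duration and cost, plus a monthly burn range per stage."""
    rows = []
    months_lo = months_hi = cost_lo = cost_hi = 0
    for name, (m_lo, m_hi), (c_lo, c_hi) in stages:
        months_lo += m_lo
        months_hi += m_hi
        cost_lo += c_lo
        cost_hi += c_hi
        rows.append({
            "stage": name,
            "cumulative_months": (months_lo, months_hi),
            "cumulative_usd": (cost_lo, cost_hi),
            # Lowest burn: cheapest budget over the longest duration, and vice versa.
            "monthly_burn_usd": (c_lo / m_hi, c_hi / m_lo),
        })
    return rows

plan = cumulative_plan(STAGES)
for row in plan:
    print(row)
```

Under the sequential assumption, the three stages total 9–18 months and USD 185k–750k before any deployment study, which is the kind of headline figure a milestone-linked financing plan can be built around.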

11) Common investor gotchas & how to avoid them

- Gotcha: “You didn’t pre-specify how you’ll handle noisy or missing data.” → Fix: pre-specify epoch-exclusion rules and a plausibility filter; run simulations and include them.
- Gotcha: “Your comparator timing wasn’t exact.” → Fix: synchronise clocks (NTP), log offsets, include time-window matching rules.
- Gotcha: “No subgroup performance report (skin tone, motion).” → Fix: pre-specify subgroup analyses as secondary (or explicitly exploratory) endpoints and collect reflectance/phototype metrics (Canali et al., 2022).
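A pre-specified plausibility filter can be written down as code before the study starts, so that exclusions are mechanical rather than judgment calls made after seeing the data. The thresholds below (30–220 bpm, signal quality ≥ 0.7) and the epoch fields are hypothetical placeholders; the real values belong in the protocol.

```python
from collections import Counter

# Hypothetical pre-specified thresholds; fix the real ones in the protocol.
HR_MIN_BPM, HR_MAX_BPM = 30, 220
MIN_SIGNAL_QUALITY = 0.7

def exclusion_reason(epoch):
    """Return None if the epoch is analysable, else the pre-specified exclusion reason."""
    hr, quality = epoch.get("hr"), epoch.get("quality")
    if hr is None or quality is None:
        return "missing"
    if quality < MIN_SIGNAL_QUALITY:
        return "low_signal_quality"
    if not HR_MIN_BPM <= hr <= HR_MAX_BPM:
        return "implausible_hr"
    return None

def apply_filter(epochs):
    """Split epochs into kept and excluded, counting exclusions by reason."""
    kept, reasons = [], Counter()
    for epoch in epochs:
        reason = exclusion_reason(epoch)
        if reason is None:
            kept.append(epoch)
        else:
            reasons[reason] += 1
    failure_rate = 1 - len(kept) / len(epochs) if epochs else 0.0
    return kept, dict(reasons), failure_rate

# Hypothetical epochs, for illustration only.
epochs = [
    {"hr": 72, "quality": 0.95},
    {"hr": 250, "quality": 0.90},   # outside the plausible range
    {"hr": 80, "quality": 0.40},    # poor signal
    {"hr": None, "quality": 0.80},  # no reading produced
    {"hr": 65, "quality": 0.85},
]
kept, reasons, failure_rate = apply_filter(epochs)
print(len(kept), reasons, failure_rate)
```

The exclusion counts double as the "failure rate" secondary endpoint, and reporting them by reason shows investors whether unusable readings come from the sensor, the algorithm, or how the device is worn.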

Africa-specific/regulatory note (short)

- Kenya: engage the Pharmacy & Poisons Board / medical devices unit and eHealth guidance early; include local ethical approvals (RVO.nl).
- South Africa: SAHPRA is formalising medical-device regulation; check the Medical Devices Unit and any emergency listing processes if relevant. Early engagement reduces surprises (SAHPRA, n.d.).

Document local engagements (emails, minutes) in your data room.

References (APA format with live links)

Cohen, J. F., et al. (2016). STARD 2015 guidelines for reporting diagnostic accuracy studies: Explanation and elaboration. BMJ Open, 6(11), e012799. https://www.equator-network.org/reporting-guidelines/stard/

Canali, S., et al. (2022). Challenges and recommendations for wearable devices in clinical applications. NPJ Digital Medicine. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC9931360/

U.S. Food and Drug Administration. (2024). Digital Health Technologies for Remote Data Acquisition in Clinical Investigations: Guidance for Industry, Investigators, and Other Stakeholders. https://www.fda.gov/media/155022/download

International Organization for Standardization. (2016). ISO 13485:2016: Medical devices, Quality management systems. https://www.iso.org/standard/59752.html

Wang, L., et al. (2022). Validation of cuffless blood pressure monitoring according to ANSI/AAMI/ISO 81060-2. Journal Article (example study). https://www.ncbi.nlm.nih.gov/pmc/articles/PMC9592900/

UL. (n.d.). Wearable Technology Testing and Certification. https://www.ul.com/services/wearable-technology-testing-and-certification

Simplexity Product Development. (2023). IEC 60601 testing: An overview for medical device development. https://www.simplexitypd.com/iec-60601-testing-overview-for-medical-device-development/

Excedr. (2025). What VCs Look for in Clinical Trials. https://www.excedr.com/blog/what-vcs-look-for-in-clinical-trials

Transform Health Coalition. (2024). Legislative Guide: Digital Health Technologies and Data in Kenya. https://transformhealthcoalition.org/wp-content/uploads/2024/03/Legislative-Guide-Digital-Health-Technologies-and-Data-in-Kenya.pdf

SAHPRA. (n.d.). Medical devices. https://www.sahpra.org.za/medical-devices/

Tang, L. L., Emerson, S. S., & Pritchard, J. (2009). Sample size recalculation in sequential diagnostic trials. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2800369/
