Human–AI Interaction: Designing Alerts Clinicians Trust and Act On

Clinical AI alerts can improve patient safety, or they can become ignored noise. This APA-style article for an African audience examines why clinicians override or ignore alerts (alert fatigue, poor fit with workflow, low trust), distills human-centered design and governance strategies for creating alerts that clinicians will trust and act on, and offers practical design patterns, validation methods, and policy recommendations grounded in global evidence and clinical decision-support stewardship. It includes a 10-point checklist and sample alert templates suitable for resource-constrained African health settings.

Abstract

Artificial intelligence (AI) and clinical decision-support systems (CDSS) promise to improve safety and outcomes by generating alerts at the point of care. Yet poorly designed alerts create cognitive load, disrupt workflow, and cause “alert fatigue,” leading clinicians to ignore or override warnings and eroding their potential benefit. This article argues that trustworthy, actionable alerts require deliberate human–AI interaction design, clinical-relevance tuning, local validation, governance, and continuous stewardship. We synthesize evidence on why alerts fail, practical design patterns that increase acceptance (relevance, timing, explainability, graded interruptiveness, provenance), and governance practices (audit logs, clinical steering committees, performance dashboards). Recommendations are grounded in international evidence on CDSS stewardship, alert fatigue, and trust in AI, and are adapted for African clinical contexts where resource, connectivity, and workforce constraints shape which solutions are feasible. PMC+2

Introduction

Clinical alerts (drug interaction warnings, sepsis risk flags, abnormal-lab notifications, or AI-based imaging prompts) are a core output of CDSS and clinical AI. When designed well, they prevent harm; when designed poorly, they produce excess interruptions that clinicians learn to ignore. In Africa, where clinical workloads are often high and infrastructure is variable, poorly tuned alerts risk becoming another drain on clinician time rather than a patient safety tool. To succeed, designers must prioritise trust (do clinicians believe the alert?), actionability (can they act on it quickly?), and fit (does it match the workflow and locally available resources?). Evidence-based alert stewardship and human-centered AI design can reduce overrides and improve clinical uptake. PMC+1

Why Alerts Fail: The Evidence (Key Failure Modes)

-

Alert overload and fatigue. Excessive volume of low-value alerts desensitizes clinicians, increasing override rates and missed high-value warnings. Studies and reviews show that many interruptive alerts are overridden frequently because they lack clinical relevance or come at inappropriate times. PMC+1

-

Poor relevance to clinical context. Alerts generated without patient- or setting-aware logic (e.g., ignoring local formularies, lab availability, or treatment protocols) feel irrelevant and untrustworthy to frontline staff. ScienceDirect

-

Workflow disruption and timing. Alerts that interrupt a critical task (during procedures, order entry, or emergencies) are more likely to be dismissed. Timing and channel matter (in-EHR popups vs. passive dashboards). PMC

-

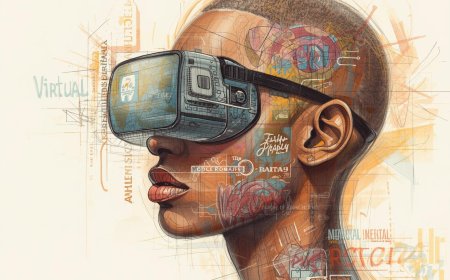

Lack of explainability and provenance. Clinicians need to know why an alert fired — what data triggered it, and how confident the model is — to decide whether to act. Black-box alerts without transparent rationale reduce trust. PMC

-

Poorly calibrated thresholds and low specificity. High false-positive rates (low positive predictive value) create many nuisance alerts; clinicians quickly learn that most alerts do not require action. NCBI

-

Absence of local validation and stewardship. Deployments without local validation, clinician involvement, or post-deployment monitoring degrade over time and across sites. Stewardship programs are needed to maintain and tune alerts. PMC
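The link between low specificity and nuisance alerts is easiest to see through prevalence. The Python sketch below applies Bayes' theorem to compute PPV from sensitivity, specificity, and prevalence; the 90%-sensitive, 90%-specific alert and the three prevalence values are hypothetical numbers chosen for illustration, not results from the cited studies.

```python
def ppv(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Positive predictive value from sensitivity, specificity and prevalence (Bayes' theorem)."""
    p_true_alert = sensitivity * prevalence                    # P(alert fires AND condition present)
    p_false_alert = (1.0 - specificity) * (1.0 - prevalence)   # P(alert fires AND condition absent)
    return p_true_alert / (p_true_alert + p_false_alert)


if __name__ == "__main__":
    # Hypothetical alert that is 90% sensitive and 90% specific.
    for prevalence in (0.20, 0.05, 0.01):
        print(f"prevalence {prevalence:.0%}: PPV = {ppv(0.90, 0.90, prevalence):.2f}")
```

For this hypothetical alert, PPV falls from about 0.69 at 20% prevalence to 0.32 at 5% and 0.08 at 1%. At 1% prevalence, roughly 11 of every 12 alerts are false positives even though the model's sensitivity and specificity look respectable.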

Principles for Designing Alerts Clinicians Trust and Act On

-

Clinical relevance first — co-design alerts with clinicians to ensure each alert maps to a real clinical decision and a feasible action in that setting. If staff can’t act (e.g., recommended medication not on formulary), the alert is useless.

-

Least-intrusive effective notification — use a graded interruptiveness model: passive (dashboard, in-chart flag) → soft interrupt (non-modal banner) → hard interrupt (modal dialog requiring acknowledgement) only for high-urgency, high-certainty events. Evidence shows semi-automated workflows (triage by pharmacist or nurse) can reduce physician interruptions while maintaining safety. smw.ch

-

Explainability & concise provenance — each alert should include (a) why it fired (data points), (b) confidence or risk score, and (c) recommended next step(s). Short, structured justifications enable quick cognitive checks.

-

High precision and local calibration — tune thresholds for positive predictive value in your local setting. Prioritise specificity for high-volume alerts and sensitivity for time-critical, low-volume events.

-

Context-aware logic — incorporate local clinical practice, formularies, device availability, and patient population into rules or model inputs so alerts align with what clinicians expect and can do.

-

Human-in-the-loop and escalation paths — route low-confidence or policy-sensitive alerts to a second reviewer (pharmacist, senior clinician) and document escalation workflows so clinicians know when to defer.

-

Interrupt only when benefit > cost — implement a measurable harm-reduction threshold before making alerts interruptive; use pilot A/B testing to estimate the net clinical benefit.

-

Transparent governance and stewardship — maintain an alert-stewardship committee (clinicians, informaticians, managers) that reviews alert metrics, overrides, and outcome data regularly to retire low-value alerts and refine thresholds. PMC

-

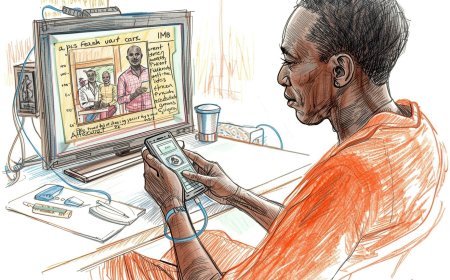

Design for constrained settings — assume variable connectivity, limited specialists, high patient volumes, and mobile-first workflows in many African contexts. Lightweight, SMS-compatible, or offline-capable alert channels can be more practical than full EHR modal dialogs.

-

Train and socialise — invest in short, scenario-based training and rapid feedback loops so users understand alert intent, limitations, and how to respond.
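Local calibration can start from a simple threshold sweep on retrospective local data. The sketch below, with a hypothetical function name and toy data, returns the lowest score threshold whose PPV meets a site-chosen target, together with the sensitivity that threshold retains. It is one illustrative approach, not a validated calibration method.

```python
from typing import Optional, Sequence, Tuple


def lowest_threshold_for_ppv(
    scores: Sequence[float], labels: Sequence[int], target_ppv: float
) -> Optional[Tuple[float, float, float]]:
    """Return (threshold, ppv, sensitivity) for the lowest threshold meeting target_ppv.

    scores: model risk scores on local retrospective cases.
    labels: 1 if the case truly had the condition, else 0.
    An alert fires when score >= threshold. Lower thresholds fire more alerts,
    so the lowest threshold that still meets the PPV target keeps the most
    sensitivity. Returns None if no threshold reaches the target.
    """
    total_positives = sum(labels)
    if total_positives == 0:
        raise ValueError("local data contains no positive cases")
    # Candidate thresholds: every distinct locally observed score, ascending.
    for threshold in sorted(set(scores)):
        fired = [y for s, y in zip(scores, labels) if s >= threshold]  # never empty
        true_alerts = sum(fired)
        ppv = true_alerts / len(fired)
        if ppv >= target_ppv:
            return threshold, ppv, true_alerts / total_positives
    return None


if __name__ == "__main__":
    # Toy local data, for illustration only.
    scores = [0.10, 0.30, 0.40, 0.60, 0.70, 0.80, 0.90, 0.95]
    labels = [0, 0, 1, 0, 1, 0, 1, 1]
    print(lowest_threshold_for_ppv(scores, labels, target_ppv=0.75))
```

Choosing the lowest qualifying threshold reflects the trade-off named above: it meets the precision target while giving up as little sensitivity as possible. A real deployment would also check the estimate on held-out data, since PPV computed from a handful of fired alerts is noisy.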

Practical Design Patterns & Templates

A. Graded Alert Pattern (example)

- Passive flag: Red border and icon in the patient header for “Sepsis risk (low confidence): check vitals.”

- Soft interrupt (non-modal): Banner during order entry: “Sepsis score moderate (0.6). Consider repeat lactate.” Includes: [Why it fired] [Confidence] [One-line next step] [Remind me later] [Escalate].

- Hard interrupt: Used only when the score exceeds 0.85 and the clinician is ordering a high-risk medication; it forces acknowledgement and records the rationale if the alert is overridden.

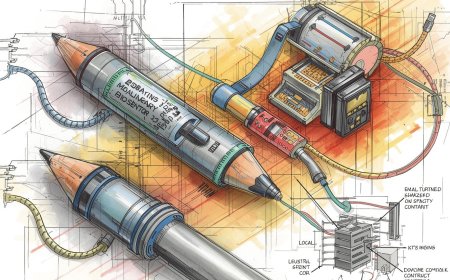
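The graded pattern can be expressed as a small routing rule. In the sketch below, the 0.85 cut-off and the high-risk-medication condition come from the pattern above; the 0.5 boundary between the passive and soft tiers, and the names `Tier` and `choose_tier`, are assumptions for illustration that each site would tune locally.

```python
from enum import Enum

# 0.85 and the high-risk-medication condition come from the graded pattern;
# SOFT_THRESHOLD = 0.5 is a hypothetical cut-off each site would tune locally.
SOFT_THRESHOLD = 0.5
HARD_THRESHOLD = 0.85


class Tier(Enum):
    PASSIVE = "passive flag in patient header"
    SOFT = "non-modal banner"
    HARD = "modal dialog; acknowledgement and override reason required"


def choose_tier(score: float, ordering_high_risk_med: bool) -> Tier:
    """Pick the least intrusive tier that matches the score and clinical context."""
    if score > HARD_THRESHOLD and ordering_high_risk_med:
        return Tier.HARD
    if score >= SOFT_THRESHOLD:
        return Tier.SOFT
    return Tier.PASSIVE


if __name__ == "__main__":
    print(choose_tier(0.60, ordering_high_risk_med=False))
    print(choose_tier(0.90, ordering_high_risk_med=False))
    print(choose_tier(0.90, ordering_high_risk_med=True))
```

A high score alone never produces a hard interrupt; the clinical context (ordering a high-risk medication) must also be present, which keeps modal dialogs rare.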

B. Explainable Alert Template (one-line + provenance)

“Acute kidney injury risk triggered by: creatinine rise + urine output drop (last 12 hrs). Risk score 0.78. Recommended: pause nephrotoxic drug X, check creatinine q6h. [Data] [How this score was computed] [Accept / Defer (reason required)].”
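One way to make this template hard to misuse is to store each alert as structured fields and render the one-line text from them, refusing to build an alert that lacks its explanation or next step. The class and field names below are hypothetical; the rendered text mirrors the acute kidney injury example above.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class ExplainableAlert:
    condition: str                 # what the alert is about
    triggers: Tuple[str, ...]      # (a) data points that made it fire
    window_hours: int              # look-back window for those data points
    risk_score: float              # (b) model risk score in [0, 1]
    next_steps: Tuple[str, ...]    # (c) recommended, locally feasible actions
    buttons: Tuple[str, ...] = ("Data", "How this score was computed",
                                "Accept / Defer (reason required)")

    def __post_init__(self) -> None:
        # Refuse to build an alert that lacks its explanation or next step.
        if not self.triggers or not self.next_steps:
            raise ValueError("alert needs at least one trigger and one next step")
        if not 0.0 <= self.risk_score <= 1.0:
            raise ValueError("risk_score must be in [0, 1]")

    def render(self) -> str:
        """One-line alert text followed by the provenance/action buttons."""
        return (
            f"{self.condition} triggered by: {' + '.join(self.triggers)} "
            f"(last {self.window_hours} hrs). Risk score {self.risk_score:.2f}. "
            f"Recommended: {', '.join(self.next_steps)}. "
            + " ".join(f"[{b}]" for b in self.buttons)
        )


if __name__ == "__main__":
    alert = ExplainableAlert(
        condition="Acute kidney injury risk",
        triggers=("creatinine rise", "urine output drop"),
        window_hours=12,
        risk_score=0.78,
        next_steps=("pause nephrotoxic drug X", "check creatinine q6h"),
    )
    print(alert.render())
```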

C. Triage + Stewardship Workflow (semi-automated)

-

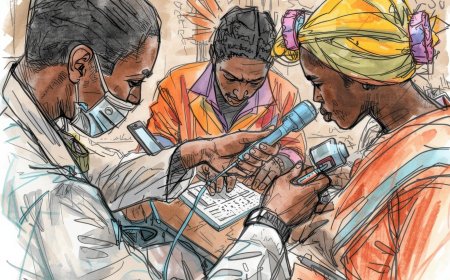

AI model generates candidate alerts into a pharmacist/nurse triage queue.

-

Triage reviewer validates and forwards only high-value alerts to the responsible clinician.

-

All overrides auto-logged and sampled for root-cause review by stewardship committee monthly. Evidence shows such semi-automated paths raise acceptance while lowering physician interruptions. smw.ch
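The triage workflow can be sketched as a queue with an override log. The class and method names below are hypothetical, and a real system would persist these records in the EHR or a database rather than in memory. The sketch only shows the flow: candidates go to the reviewer, only forwarded alerts reach the clinician, every override needs a reason, and a random sample of overrides is drawn for monthly review.

```python
import random
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class CandidateAlert:
    alert_id: str
    patient_id: str
    summary: str
    risk_score: float


@dataclass
class OverrideRecord:
    alert_id: str
    clinician: str
    reason: str


@dataclass
class TriageWorkflow:
    queue: List[CandidateAlert] = field(default_factory=list)      # awaiting pharmacist/nurse review
    forwarded: List[CandidateAlert] = field(default_factory=list)  # sent to the responsible clinician
    dismissed: List[CandidateAlert] = field(default_factory=list)  # kept for audit, never shown to clinician
    overrides: List[OverrideRecord] = field(default_factory=list)

    def submit(self, alert: CandidateAlert) -> None:
        """Model output lands in the triage queue, not in front of the physician."""
        self.queue.append(alert)

    def review(self, alert_id: str, high_value: bool) -> None:
        """Triage reviewer forwards high-value alerts and dismisses the rest."""
        for i, alert in enumerate(self.queue):
            if alert.alert_id == alert_id:
                target = self.forwarded if high_value else self.dismissed
                target.append(self.queue.pop(i))
                return
        raise KeyError(alert_id)

    def record_override(self, alert_id: str, clinician: str, reason: str) -> None:
        """Every override is logged, and a short reason is mandatory."""
        if not reason.strip():
            raise ValueError("override reason is required")
        self.overrides.append(OverrideRecord(alert_id, clinician, reason.strip()))

    def monthly_sample(self, k: int, seed: Optional[int] = None) -> List[OverrideRecord]:
        """Random sample of overrides for the stewardship committee's root-cause review."""
        rng = random.Random(seed)
        return rng.sample(self.overrides, min(k, len(self.overrides)))
```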

Validation, Monitoring & Research Methods

-

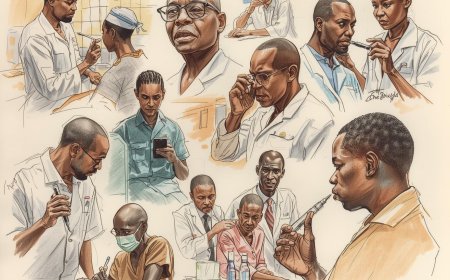

Pre-deployment local validation: measure sensitivity, specificity, PPV, NPV on local retrospective data; report subgroup performance and expected alert volume.

-

Pilot with A/B evaluation: compare clinical outcomes, override rates, and clinician workload between baseline and alert arm. Use run charts and statistical process control to monitor.

-

Override analysis: require short override reasons; sample and audit overrides to distinguish clinically appropriate overrides from nuisance.

-

User feedback channels: embed one-click feedback (“not useful / inaccurate / timing wrong”) and log free-text comments for qualitative analysis.

-

Performance dashboards & alerts about alerts: maintain dashboards showing daily alert counts, acceptance, median time-to-action, and patient outcomes; trigger stewardship review if acceptance falls below thresholds. PMC+1
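The validation and monitoring metrics above reduce to a few formulas. The sketch below computes sensitivity, specificity, PPV, NPV, and expected daily alert volume from a retrospective confusion matrix, and applies a standard p-chart lower control limit (baseline rate minus three standard errors) to a day's acceptance rate. The function names, the assumption that the retrospective data covers every patient-day the live system would score, and the example counts are illustrative.

```python
import math
from typing import Dict


def validation_metrics(tp: int, fp: int, tn: int, fn: int, days: float) -> Dict[str, float]:
    """Local validation summary from a retrospective confusion matrix.

    tp/fp/tn/fn: counts from replaying the alert logic on local records.
    days: number of days the retrospective data covers (assumed to include
    every patient-day the live system would score).
    """
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        "alerts_per_day": (tp + fp) / days,  # every fired alert, true or false
    }


def acceptance_below_lcl(accepted: int, fired: int, baseline: float, sigmas: float = 3.0) -> bool:
    """p-chart check: is this period's acceptance rate below the lower control limit?

    baseline: long-run acceptance proportion from the pilot period.
    """
    if fired <= 0:
        raise ValueError("no alerts fired in this period")
    standard_error = math.sqrt(baseline * (1.0 - baseline) / fired)
    lower_limit = max(0.0, baseline - sigmas * standard_error)
    return accepted / fired < lower_limit


if __name__ == "__main__":
    # Hypothetical 30-day retrospective replay.
    print(validation_metrics(tp=40, fp=160, tn=9700, fn=10, days=30))
    # Baseline acceptance 60%; 15 of 50 alerts accepted today.
    print(acceptance_below_lcl(accepted=15, fired=50, baseline=0.60))
```

In this example the alert catches 80% of true cases but only one alert in five is a true positive, at roughly 6.7 alerts per day; a day with 15 of 50 alerts accepted falls below the control limit (about 0.39) and would trigger a stewardship review.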

Governance, Ethics & Policy Considerations

- Transparent documentation: publish simple model cards and alert datasheets that describe intended use, validation results, limitations, and a contact person for clinicians. This aligns with WHO guidance on accountable AI in health. World Health Organization

- Data protection & consent: ensure that alerts and data flows comply with national laws and facility policies; anonymise logs used for model retraining.

- Clinical responsibility: alerts support decisions but do not make them; clinical responsibility remains with the treating clinician. Policies should preserve clinician autonomy while making it easy to follow safe recommendations.

- Equity & access: ensure that alert logic does not inadvertently prioritise patients with better documentation (e.g., those who have had more lab tests and therefore generate more triggering data), and create formal mitigation plans for underserved groups.

- Local oversight: create a multidisciplinary steering committee (frontline clinicians, IT, hospital management, and, where possible, patient representatives) to approve alert rollouts and govern changes. World Health Organization
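An alert datasheet can be kept as a structured record so that gaps are easy to detect. The sketch below uses hypothetical field names covering the elements listed above (intended use, validation results, limitations, contact person) and adds per-subgroup sensitivity so the equity concern can be checked, flagging subgroups whose sensitivity trails the overall figure by more than an assumed tolerance of 0.10.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class AlertDatasheet:
    alert_name: str
    intended_use: str
    validation_summary: str          # e.g. local PPV/NPV and expected alert volume
    limitations: List[str]
    contact_person: str
    subgroup_sensitivity: Dict[str, float] = field(default_factory=dict)

    def missing_fields(self) -> List[str]:
        """Names of required fields that are still empty."""
        missing = [name for name in ("alert_name", "intended_use",
                                     "validation_summary", "contact_person")
                   if not getattr(self, name).strip()]
        if not self.limitations:
            missing.append("limitations")
        return missing

    def subgroup_gaps(self, overall: float, tolerance: float = 0.10) -> List[str]:
        """Subgroups whose sensitivity trails the overall value by more than `tolerance`."""
        return sorted(group for group, sens in self.subgroup_sensitivity.items()
                      if overall - sens > tolerance)
```

A stewardship committee could refuse to approve a rollout while `missing_fields()` is non-empty, and treat any group returned by `subgroup_gaps()` as needing a documented mitigation plan.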

Adaptation for African Clinical Contexts

- Simpler channels: use SMS, USSD, or low-bandwidth dashboards for alerts where EHR coverage is incomplete.

- Human triage hubs: in settings with few physicians, route alerts to trained clinical officers, nurses, or pharmacists for triage before escalation. Evidence shows that semi-automated triage increases acceptance and reduces physician burden. smw.ch

- Local threshold tuning: calibrate thresholds using local epidemiology (disease prevalence, lab turnaround times) to avoid overwhelming staff with alerts.

- Task-shifting & task-bundling: design alerts that align with existing task-shifting practices (nurse-led triage, community health worker follow-ups) and connect each alert to a feasible next step.

- Capacity building: invest in short training sessions and simple job aids that explain how to interpret each alert and the evidence behind it.
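Where alerts go out by SMS, message length matters. A single GSM 7-bit SMS segment holds 160 septets, and a few characters (such as `[`, `]`, `{`, `}`, `|`, `~`, `^`, and `\`) each take two. The sketch below composes a compact alert and rejects, rather than silently truncates, any message that would not fit in one segment. Restricting text to printable ASCII (excluding the backtick) is a simplifying assumption, and the function and field names are hypothetical.

```python
GSM7_EXTENDED = set("^{}\\[~]|")   # each costs two septets (escape + character)
SINGLE_SEGMENT_SEPTETS = 160


def gsm7_length(text: str) -> int:
    """Septets needed for text, assuming it uses only GSM-7-safe ASCII."""
    return sum(2 if ch in GSM7_EXTENDED else 1 for ch in text)


def compose_sms(condition: str, score: float, location: str, next_step: str, ref: str) -> str:
    """Build a one-segment SMS alert, or raise ValueError so the template can be shortened."""
    msg = f"{condition} risk {score:.2f} at {location}. Next: {next_step}. Ref {ref}"
    # Simplifying assumption: printable ASCII only (the backtick is not in GSM-7).
    if any(not (32 <= ord(ch) <= 126) or ch == "`" for ch in msg):
        raise ValueError("character outside the assumed GSM-7-safe ASCII subset")
    septets = gsm7_length(msg)
    if septets > SINGLE_SEGMENT_SEPTETS:
        raise ValueError(f"needs {septets} septets; shorten the template")
    return msg


if __name__ == "__main__":
    print(compose_sms("Sepsis", 0.72, "Ward 3 bed 4", "repeat lactate, recheck vitals in 1h", "A123"))
```

Rejecting over-long messages is deliberate: truncating a recommended action could change its clinical meaning, so the template author should shorten it instead.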

Quick 10-Point Checklist (Actionable)

- Co-design alerts with frontline clinicians and nurses.

- Define the intended clinical action, and who will take it, before building the alert.

- Choose the least intrusive notification mode that achieves the clinical aim.

- Include a concise explanation and a confidence score with every alert.

- Validate PPV/NPV locally and estimate daily alert volume before deployment.

- Pilot with A/B testing, and measure override reasons and patient outcomes.

- Implement semi-automated triage for high-volume or disruptive alerts.

- Maintain an alert-stewardship committee and monthly dashboards.

- Document alerts with model cards or datasheets, and publish their limitations.

- Continuously monitor for drift, recalibrate thresholds, and retire low-value alerts. PMC+1
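The last checklist item can be partly automated. A common drift signal is calibration-in-the-large: the ratio of observed events to the sum of predicted risks over a recent window. The sketch below flags an alert for recalibration when that ratio leaves an assumed band of 0.8 to 1.25; the band and the function names are illustrative choices, and a stewardship committee would set its own limits.

```python
from typing import Sequence

# Assumed tolerance band for the observed/expected ratio; set locally.
LOW, HIGH = 0.8, 1.25


def observed_to_expected(predicted: Sequence[float], outcomes: Sequence[int]) -> float:
    """Calibration-in-the-large: observed events divided by the sum of predicted risks."""
    if len(predicted) != len(outcomes):
        raise ValueError("predicted and outcomes must be the same length")
    expected = sum(predicted)
    if expected <= 0:
        raise ValueError("no expected events in this window")
    return sum(outcomes) / expected


def needs_recalibration(predicted: Sequence[float], outcomes: Sequence[int]) -> bool:
    """Flag for stewardship review when the O/E ratio leaves the assumed band."""
    ratio = observed_to_expected(predicted, outcomes)
    return not (LOW <= ratio <= HIGH)
```

A ratio well above 1 means the model is under-predicting risk locally (alerts may be missing true cases); a ratio well below 1 means it is over-predicting, which typically shows up as excess nuisance alerts.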

Limitations and Trade-offs

Designing for higher precision may lower sensitivity, and vice versa; every setting must choose trade-offs that reflect local priorities (safety vs. workload). Semi-automated triage reduces physician interruptions but requires staffing and coordination. Federated or low-bandwidth implementations constrain how complex real-time analytics can be. Be transparent about these trade-offs, and tie each alert to measurable process or outcome goals.

Conclusion

Alerts are powerful human–AI interactions that can save lives when clinicians trust them and can act on them. Trustworthy alerts are clinically relevant, minimally intrusive, explainable, locally validated, and governed by continuous stewardship. In African settings, pragmatic adaptations (semi-automated triage, low-bandwidth channels, alignment with task-shifting) make these principles feasible. Teams that adopt the design patterns and governance steps outlined here should be better placed to reduce alert fatigue, raise acceptance, and deliver safer AI-augmented care.

References

Chaparro, J. D., et al. (2022). Clinical decision support stewardship: Best practices and methods to assess interruptive alerts and maintain safety. PubMed Central (PMC).

Wan, P. K., et al. (2020). Reducing alert fatigue by sharing low-level warnings and alert stewardship approaches. PubMed Central (PMC).

Asan, O., et al. (2020). Artificial intelligence and human trust in healthcare. PubMed Central (PMC).

Dahmke, H., et al. (2023). Tackling alert fatigue with a semi-automated clinical decision-support workflow. Swiss Medical Weekly.

Syrowatka, A., et al. (2024). Computerized clinical decision support to prevent medication errors: Rapid review and unintended outcomes including alert fatigue [Book chapter]. NCBI Bookshelf.

World Health Organization. (2021). Ethics and governance of artificial intelligence for health: WHO guidance. World Health Organization.

Park, H., et al. (2022). Appropriateness of alerts and physicians' responses with a clinical decision support system. JMIR Medical Informatics.
