Cost-effective Validation Strategies for Diagnostics Startups: Practical Guide for Africa

Step-by-step, Africa-focused playbook for validating point-of-care diagnostics on a budget. Covers phased validation (analytical → retrospective → prospective → multi-site), smart sample-sizing tricks, piggybacking on surveillance and biobanks, adaptive/sequential designs, partner agreements, QA/EQA, regulatory routes (Kenya example), and ready checklists and templates founders can use to cut cost and time while producing robust evidence. APA citations and live links included.

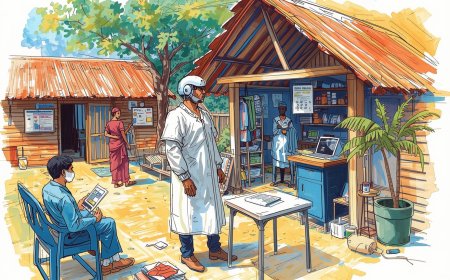

Short brief: You built a slick rapid test — now you need data that buyers, regulators and funders trust. Validation can be expensive, slow and full of surprises. This guide gives founders, product managers and implementation partners a practical, low-budget roadmap to produce defensible evidence for diagnostic accuracy, usability and implementation — with African realities (sample access, lab networks, regulator expectations) front and center. Expect cheeky field anecdotes, a Kenya-flavoured case scenario, worked sample-size math, and concrete templates you can copy into proposals.

Opening anecdote (cheap laugh, serious lesson)

A startup founder once shipped 5,000 test kits to a clinic for a “pilot” — the nurses loved the device, but weeks later lab QA found the positive-control line faded on storage in direct sun. The pilot data were unusable. The founder learned three things fast: (1) validation starts in the lab (analytical stability), (2) pilots must include simple environmental stress tests, and (3) cheap doesn’t mean rushed. Don’t pilot before you validate.

What “validation” must prove (short & practical)

Diagnostics validation usually has four linked aims:

- Analytical performance: limit of detection, precision, lot-to-lot consistency, stability under local storage.
- Diagnostic accuracy (clinical performance): sensitivity / specificity against a reference standard in the intended use population.
- Usability & robustness: can local health workers use it correctly under field conditions?
- Implementation feasibility / program utility: does it change decisions / workflows, and is it cost-effective?

You don’t need all four at once — stage them. Early savings come from doing the cheapest rigorous work first (analytical + retrospective accuracy) and delaying large prospective trials until you’ve de-risked the device.

Key reading/guidance: WHO/TDR and FIND materials on evaluating POC tests, plus reporting standards (STARD, QUADAS).

The phased, cost-conscious validation pathway (recommended order)

- Bench/Analytical validation (cheap, fast): 1–2 months
  - Test limit of detection (LOD), precision (repeatability), cross-reactivity and simple environmental stress (heat, humidity, light).
  - Use small panels (20–50 contrived positives at graded concentrations; 20–30 true negatives) to validate basic claims.
  - Outcome: go/no-go decision for clinical testing.
  - Why cheap: uses in-house or partner lab reagents and contrived samples; no patient recruitment.
- Retrospective clinical evaluation (moderate cost): 2–4 months
  - Use stored or leftover clinical specimens (biobanks, hospital remnant samples) with known reference results.
  - Advantages: faster & cheaper than prospective collection; avoids recruitment logistics.
  - Requirements: ethics approval for secondary use, clear chain of custody, representative sample mix (spectrum of disease, Ct ranges for PCR comparators).
  - Outcome: early sensitivity/specificity estimates and stratified performance by disease severity/viral load.
- Small prospective field study, a pragmatic pilot (higher cost): 3–6 months
  - Enrol consecutive patients in the intended use setting(s) (e.g., outpatient febrile clinic). Use a real reference standard (lab PCR, culture, or composite).
  - Aim for precision on sensitivity/specificity sufficient for go-to-market; consider adaptive designs (see below).
  - Outcome: definitive clinical performance in intended setting and usability observations.
- Multi-site/bridging validation & EQA (scale)
  - Run smaller confirmatory studies across 2–4 representative sites (urban/rural, different operators) and join an External Quality Assessment (EQA) scheme to show consistent product performance. Required for many national and donor tenders.
  - Work with national reference labs for surveillance coupling if possible.
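The retrospective outcome described above (sensitivity stratified by viral load) can be sketched in a few lines. This is a minimal illustration, not a prescribed analysis: the Ct cut-offs in `ct_band`, the function names and the example records are all assumptions made for the sketch, and the Wilson score interval is one reasonable choice of confidence interval for small strata.

```python
import math
from collections import defaultdict

def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a proportion (behaves well for small n)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    margin = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return max(0.0, centre - margin), min(1.0, centre + margin)

def ct_band(ct: float) -> str:
    """Hypothetical Ct bands; choose cut-offs that suit your comparator assay."""
    if ct < 25:
        return "Ct <25"
    if ct < 30:
        return "Ct 25-29.9"
    return "Ct >=30"

def sensitivity_by_band(records):
    """records: (ct_value, index_test_positive) pairs for reference-positive specimens."""
    counts = defaultdict(lambda: [0, 0])  # band -> [detected, total]
    for ct, detected in records:
        band = ct_band(ct)
        counts[band][0] += int(detected)
        counts[band][1] += 1
    return {
        band: {"detected": d, "n": n, "sensitivity": d / n, "ci95": wilson_interval(d, n)}
        for band, (d, n) in counts.items()
    }

# Made-up illustrative records, not real study data.
example = [(18.2, True), (22.5, True), (24.9, True), (27.1, True),
           (28.4, False), (31.0, True), (33.6, False), (35.2, False)]
for band, row in sensitivity_by_band(example).items():
    lo, hi = row["ci95"]
    print(f"{band}: {row['detected']}/{row['n']} detected, 95% CI {lo:.2f} to {hi:.2f}")
```

Reporting each band separately makes it obvious when a headline sensitivity is being carried by high-viral-load specimens.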

Smart cost-saving tactics (practical)

- Piggyback on existing surveillance or research cohorts: partner with ongoing surveillance, HIV/TB/NCD cohorts, or outbreak response teams to test stored or incident samples. You gain fast access to well-characterized specimens and metadata. FIND and Africa CDC often support such linkages.
- Use remnant/biobank samples for retrospective accuracy: saved patient samples (with consent/approval) are far cheaper than full prospective recruitment. Ensure storage conditions are appropriate and that specimen age isn’t a confounder.
- Adaptive / sequential designs to reduce sample needs: use sequential testing strategies that allow early stopping for success or futility. These can cut required enrolment when the device is obviously good or poor (see Tang et al. on sequential diagnostic trials). Adaptive sample recalculation can save time and money.
- Enrich for positives when disease prevalence is low: for rare conditions, use case-control or enriched sampling (more positives) to estimate sensitivity with fewer total samples. Remember that this affects predictive value estimates and must be transparently reported (STARD).
- Leverage reference labs & public infrastructure: negotiate reduced rates or in-kind testing with national reference labs (KEMRI, NICD, NRLs) in return for data sharing or co-authorship. Clinical labs often welcome partnerships that build local capacity.
- Batch testing for analytical studies: run stability & lot-consistency testing in batches, use pooled negative matrices for cross-reactivity checks, and reuse control materials across experiments to reduce reagent waste.
- Use open-source protocols, standard reporting templates (STARD), and QUADAS for study quality: this reduces reviewer pushback and speeds regulatory acceptance.
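To make the early-stopping idea concrete, here is a deliberately simple sketch of an interim rule for sensitivity. It is not the method of Tang et al.; it assumes a fixed number of pre-planned looks, splits the overall alpha evenly across them (a conservative Bonferroni-style choice), and compares an exact Clopper-Pearson interval with a minimum acceptable sensitivity. The function names, the 0.80 threshold and the interim counts are hypothetical, and a real study would have a statistician fix the boundaries in the SAP.

```python
import math

def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p); adequate for a few hundred samples."""
    return sum(math.comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))

def clopper_pearson(x: int, n: int, alpha: float) -> tuple[float, float]:
    """Exact two-sided (1 - alpha) interval for a proportion, found by bisection."""
    def solve(move_up):
        lo, hi = 0.0, 1.0
        for _ in range(60):
            mid = (lo + hi) / 2
            if move_up(mid):
                lo = mid
            else:
                hi = mid
        return (lo + hi) / 2

    # Lower bound: P(X >= x) rises with p; find where it reaches alpha/2.
    lower = 0.0 if x == 0 else solve(lambda p: 1 - binom_cdf(x - 1, n, p) < alpha / 2)
    # Upper bound: P(X <= x) falls with p; find where it drops to alpha/2.
    upper = 1.0 if x == n else solve(lambda p: binom_cdf(x, n, p) > alpha / 2)
    return lower, upper

def interim_decision(detected: int, positives: int, min_sensitivity: float,
                     n_looks: int, overall_alpha: float = 0.05) -> str:
    """Decision at one pre-planned look, using an even alpha split across looks."""
    lower, upper = clopper_pearson(detected, positives, overall_alpha / n_looks)
    if lower >= min_sensitivity:
        return "stop: success"
    if upper < min_sensitivity:
        return "stop: futility"
    return "continue"

# Three hypothetical interim results, each with 60 reference positives so far.
for detected in (50, 60, 30):
    print(detected, "of 60 detected ->", interim_decision(detected, 60, 0.80, n_looks=3))
```

The even alpha split is easy to explain to an ethics committee but wastes some efficiency; formal group-sequential boundaries spend alpha more carefully and are worth the extra statistical effort for larger studies.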

Sample-size planning without breaking the bank — worked example (do the math right)

Key principle: sensitivity/specificity precision drives the number of positives/negatives you need. A common formula for required positives (n_pos) to estimate sensitivity with half-width of CI = d is:

n_pos ≈ (Z² × Se × (1−Se)) / d²

Where Z = 1.96 for 95% CI.

Worked example (step-by-step arithmetic):

- Expected sensitivity → Se = 0.90.
- Acceptable half-width of 95% CI → d = 0.05.
- Z = 1.96.

Compute numerator: Z² × Se × (1−Se) = 1.96² × 0.90 × 0.10 = 3.8416 × 0.09 = 0.345744.

Divide by d²: 0.345744 / 0.0025 = 138.2976 → round up → 139 positives required.

If prevalence in your study population is p = 10% (0.10), total sample size N ≈ 139 / 0.10 = 1,390 participants. That’s why studies often use enriched or retrospective positives to avoid recruiting thousands of participants. If you can instead access a biobank with 150 positives and 300 negatives, you probably have enough precision for initial regulatory submissions (150 positives give a 95% CI half-width of about ±0.048 at Se = 0.90) and enough evidence to justify a smaller prospective pilot.
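The same arithmetic is easy to script so you can try other targets. The sketch below implements the normal-approximation formula given above; the function names are illustrative, and the small rounding step before `ceil` is only there to guard against floating-point noise.

```python
import math

def positives_needed(expected_se: float, half_width: float, z: float = 1.96) -> int:
    """n_pos = z^2 * Se * (1 - Se) / d^2, rounded up."""
    n = (z**2) * expected_se * (1.0 - expected_se) / (half_width**2)
    return math.ceil(round(n, 6))

def total_to_enrol(n_positives: int, prevalence: float) -> int:
    """Consecutive participants needed to expect n_positives reference-positive cases."""
    return math.ceil(round(n_positives / prevalence, 6))

def ci_half_width(p: float, n: int, z: float = 1.96) -> float:
    """Half-width of the normal-approximation CI for a proportion p estimated from n samples."""
    return z * math.sqrt(p * (1.0 - p) / n)

n_pos = positives_needed(0.90, 0.05)
print(n_pos)                                # 139
print(total_to_enrol(n_pos, 0.10))          # 1390
print(round(ci_half_width(0.90, 150), 3))   # 0.048
```

The same functions work for specificity: pass the expected specificity and the number of negatives. The normal approximation gets rough when the expected value is close to 1 or the sample is small, so treat these numbers as planning estimates and confirm them with an exact method or a published calculator.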

(References on diagnostic sample-size calculators and methods: Akoglu 2022; Tang 2009).

Design choices that cut cost (with tradeoffs — be explicit)

- Case-control (enriched) accuracy studies
  - Pros: fewer participants, faster.
  - Cons: positive predictive value computed from the enriched sample is overestimated because prevalence is artificially high; results must later be confirmed in prospective settings. Report clearly per STARD.
- Retrospective remnant sample study
  - Pros: cheap, fast.
  - Cons: spectrum bias risk (stored positives are often severe cases). Use stratified analyses by Ct/biomarker levels.
- Sequential/adaptive prospective trials
  - Pros: can stop early, saving time and money.
  - Cons: requires an upfront statistical plan and interim analysis capacity. See Tang et al. (2009) for methods.
- Pooling samples for LOD/analytical work
  - Pros: saves reagents.
  - Cons: not appropriate for clinical accuracy estimates.
- Use composite reference standards when the gold standard is impractical
  - Pros: makes real-world evaluation feasible.
  - Cons: introduces complexity in interpretation; must be justified, with sensitivity analyses performed.
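The predictive-value tradeoff for enriched designs is easy to see numerically. The sketch below applies Bayes' rule to a fixed sensitivity and specificity at two prevalences: the artificial 50% of a balanced case-control sample and the 10% used in the worked example. The specificity of 0.95 is an assumed value for illustration only.

```python
def predictive_values(sensitivity: float, specificity: float, prevalence: float) -> tuple[float, float]:
    """Return (PPV, NPV) for a test applied at the given prevalence."""
    tp = sensitivity * prevalence
    fn = (1 - sensitivity) * prevalence
    fp = (1 - specificity) * (1 - prevalence)
    tn = specificity * (1 - prevalence)
    return tp / (tp + fp), tn / (tn + fn)

# Same test, two populations: an enriched study sample vs the intended-use clinic.
for label, prev in [("enriched sample (50% positives)", 0.50),
                    ("intended-use setting (10% prevalence)", 0.10)]:
    ppv, npv = predictive_values(0.90, 0.95, prev)
    print(f"{label}: PPV {ppv:.3f}, NPV {npv:.3f}")
# enriched sample (50% positives): PPV 0.947, NPV 0.905
# intended-use setting (10% prevalence): PPV 0.667, NPV 0.988
```

Sensitivity and specificity are unchanged between the two rows; only the predictive values move. That is why enriched studies should report Se/Sp and recompute PPV/NPV at the prevalence expected in the intended setting.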

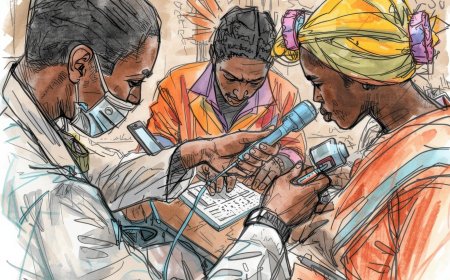

Usability & field robustness testing — small investments, big returns

A diagnostic that performs brilliantly but is misread in the clinic is worthless. Do short, cheap usability cycles:

- Observe 10–20 typical users (nurses, CHWs) doing the test unprompted; record error types and time-to-result. Iterate 2–3 design fixes.
- Simple environmental stress tests: 7–14 day exposures at local temperature/humidity ranges; run a small panel to detect loss of performance.
- Operator variability: have multiple cadres (lab tech, nurse, CHW) run the same samples to quantify operator effect.

Usability failures are cheap to fix early and save huge costs later in field validation.
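One simple way to put a number on operator effect is chance-corrected agreement between two readers of the same panel. The sketch below computes Cohen's kappa; the reads are made-up illustrative data, and kappa is only one possible summary (per-cadre sensitivity against the reference result is another).

```python
from collections import Counter

def cohens_kappa(reads_a: list[str], reads_b: list[str]) -> float:
    """Cohen's kappa for two operators reading the same specimens in the same order."""
    if len(reads_a) != len(reads_b) or not reads_a:
        raise ValueError("need two non-empty read lists of equal length")
    n = len(reads_a)
    observed = sum(a == b for a, b in zip(reads_a, reads_b)) / n
    freq_a, freq_b = Counter(reads_a), Counter(reads_b)
    expected = sum(freq_a[c] * freq_b[c] for c in set(freq_a) | set(freq_b)) / n**2
    if expected == 1.0:
        return 1.0  # both operators gave the same single category throughout
    return (observed - expected) / (1 - expected)

# Hypothetical panel of 20 specimens read by a lab tech and a CHW.
lab_tech = ["pos"] * 8 + ["neg"] * 12
chw = ["pos"] * 7 + ["neg"] * 13   # CHW misses one faint positive line
print(round(cohens_kappa(lab_tech, chw), 3))  # 0.894
```

Disagreements concentrated on faint lines or low-positive specimens usually point to a readability or IFU problem rather than a training problem.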

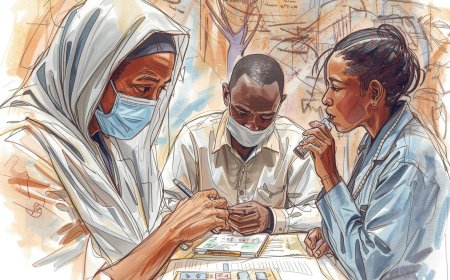

Regulatory & reporting: get it right the first time (Kenya example + general notes)

- Kenya: The Pharmacy & Poisons Board (PPB) publishes guidelines for registration of medical devices including IVDs. Engage PPB early and follow their dossier checklist for clinical evidence and manufacturing QA. Clinical research involving human subjects also requires NACOSTI/ethics clearance and PPB notification. Use ClinRegs Kenya for up-to-date regulatory steps.
- WHO PQ & donor markets: If you target large procurement (Global Fund, Unitaid) you’ll likely need WHO Prequalification (or SRA equivalence). PQ expectations now include clearly documented analytical work, prospective clinical accuracy across intended settings, and robust QMS (ISO 13485). PQ processes have recent updates and support for African manufacturers, so engage PQ teams early.
- Reporting standards: Use STARD 2015 for reporting diagnostic accuracy and QUADAS for internal study quality assessment; they make peer review and regulator reviews much smoother.

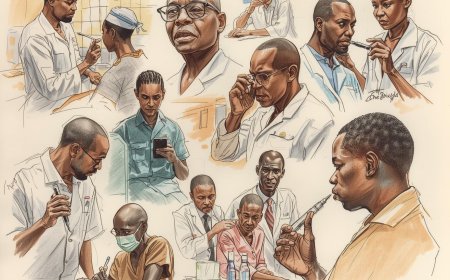

Partnerships that dramatically lower cost (who to call)

- National reference labs (KEMRI, NICD, NRLs): for comparator testing and reduced-cost PCR runs.
- FIND / WHO / TDR: technical support, potential specimen access and sometimes financial support for evaluation work. FIND often runs multi-site validations and can advise on study design.
- Academic hospitals / surveillance programs: piggyback prospective recruitment during busy seasons (flu season, malaria peaks).
- Biobanks & existing studies: negotiate access to stored, well-characterized samples (HIV/TB cohorts, febrile illness studies).
- Regional EQA providers & Africa CDC networks: to demonstrate ongoing lot performance and participation in proficiency testing.

Formalize partnerships with MoUs that specify specimen access, data ownership, publication rights and cost-sharing.

Minimum documentation & data package (what funders/regulators want)

- Analytical protocols and raw data (LOD, precision, cross-reactivity).
- Retrospective accuracy report with STARD checklist.
- Prospective study protocol, sample size justification and statistical analysis plan (SAP).
- Usability test reports and photographs of IFUs / packaging.
- Lot-to-lot consistency data and stability testing.
- QMS evidence: SOPs, supplier qualifications, batch records.
- Data management plan, anonymization & consent forms (per national DPA/regulator).

Assemble this as a single submission folder for regulators and partners.

A 6–9 month cost-conscious validation timeline (practical)

Month 0–1: Analytical bench work (LOD, cross-reactivity, stability); finalize SAP.

Month 2–3: Retrospective study on biobank/remnant samples (n≈200–500 depending on disease); submit STARD-formatted report.

Month 4–6: Small prospective adaptive pilot (target positives based on sample-size calc; use sequential stopping rules). Usability testing runs in parallel.

Month 7–9: Multi-site bridging & EQA enrolment; finalize regulatory dossier and submit to national regulator / WHO PQ pre-consultation.

This timeline is ambitious — contracting, ethics, cold chain and site setup can introduce delays. Mitigate by lining up partners and ethics approvals early.

Quick practical checklists (copy-paste into your project plan)

Pre-study (must-do)

- Analytical SOPs written & controls prepared.
- Ethics & regulator pre-notified; MoUs with partner sites signed.
- STARD checklist and SAP drafted.
- Supply chain: stable control materials & spare kits.
- Data system: CRF/eCRF and database ready.

Retrospective study essentials

- Specimen list with reference method results and Ct/quantitative metadata.
- Chain of custody & storage condition logs.
- Blinding procedures (operator masked to reference results).

Prospective study essentials

- Consecutive patient enrolment SOP.
- Adverse event reporting & referral SOP.
- Operator training logs & usability assessment forms.
- Interim analysis plan & stopping rules (if adaptive).

Regulatory dossier

- Analytical data, clinical reports (STARD), stability data, QMS documents.
- Labelling, IFU, packaging photos and translations.
- EQA enrolment proof and post-market surveillance plan.

Short Kenya case vignette (realistic scenario)

A Nairobi medtech startup partnered with a county referral hospital and KEMRI to validate an Ag-RDT for a febrile pathogen. They: (1) performed bench LOD/stability tests in month 1, (2) ran a retrospective study on 220 stored PCR-positive samples (sourced from a previous fever study) in months 2–3, and (3) ran a small adaptive prospective pilot in two outpatient clinics (enrolling 450 patients over months 4–6). By piggybacking on an existing cohort and using sequential stopping rules, they reduced total cost by ~40% versus a full naive prospective design and got actionable evidence for the Pharmacy & Poisons Board submission. (This is a composite scenario reflecting approaches encouraged by FIND and local lab partnerships.)

Final tactical tips (short bullets)

- Validate early and cheaply on contrived and stored samples; only escalate to expensive large trials after passing early gates.
- Use adaptive designs and enrichment to avoid recruiting thousands prematurely.
- Build formal MoUs with national labs and surveillance programs before you need samples.
- Document everything to STARD/QUADAS standards; sloppy reports cost time and credibility.

References (APA — live links)

- FIND. (2025). FIND: Diagnosis for all. https://www.finddx.org/
- World Health Organization / TDR. (n.d.). A guide to aid the selection of diagnostic tests. https://tdr.who.int/home/our-work/global-engagement/BLT.16.187468
- Bossuyt, P. M., Reitsma, J. B., Bruns, D. E., Gatsonis, C. A., Glasziou, P. P., Irwig, L. (2015). STARD 2015: an updated list of essential items for reporting diagnostic accuracy studies. BMJ. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5128957/
- Whiting, P. F., Rutjes, A. W., Westwood, M. E., Mallett, S., Deeks, J. J., Reitsma, J. B., Leeflang, M. M. (2006). QUADAS: a tool for the quality assessment of studies of diagnostic accuracy included in systematic reviews. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC1421422/
- Akoglu, H. (2022). User’s guide to sample size estimation in diagnostic accuracy studies. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC9639742/
- Tang, L. L., Emerson, S. S., & Pritchard, J. (2009). Sample size recalculation in sequential diagnostic trials. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2800369/
- World Health Organization. (2024). WHO Prequalification of In Vitro Diagnostics: PQDx updates. https://extranet.who.int/prequal/sites/default/files/document_files/day2_session5.1_pqt-ivd_update2024.pdf
- Pharmacy & Poisons Board (Kenya). (n.d.). Guidelines for Registration of Medical Devices Including In-Vitro Diagnostics. https://web.pharmacyboardkenya.org/download/guidelines-for-registration-of-medical-devices-including-in-vitro-diagnostics/
- ClinRegs (NIH/NIAID). (2025). Clinical Research Regulation: Kenya. https://clinregs.niaid.nih.gov/country/kenya
- Africa CDC. (2025). Decentralize diagnostics and accelerate outbreak response. https://africacdc.org/news-item/africa-launches-continental-strategy-to-decentralize-diagnostics-and-accelerate-outbreak-response/
