Measuring Impact: Evaluation Frameworks for CHW Tech Interventions

Written in APA style for an African audience, this article explains how to design, run, and report rigorous evaluations of community health worker (CHW) technology interventions. It compares evaluation frameworks (RE-AIM, MRC complex-intervention guidance, WHO digital-health M&E, CHW performance frameworks), recommends mixed-methods designs and practical indicators, and provides a step-by-step evaluation roadmap, cost and timeline guidance, and a 12-point operational checklist that implementers, funders, and ministries can use to produce decision-grade evidence for scale-up.

Abstract

As mobile and digital tools become routine in CHW programmes across Africa, rigorous yet practical impact measurement is essential to judge value, guide scale-up, and protect patients. This article synthesises leading evaluation frameworks (RE-AIM, the WHO guidance on monitoring and evaluating digital health interventions, the Medical Research Council (MRC) framework for complex interventions, and CHW-specific performance frameworks) and shows how to apply them to CHW tech interventions such as apps for case management, referrals, supervision, training, and payments. We describe study designs (from rapid programme audits to stepped-wedge trials), a priority indicator set, data sources, mixed-methods approaches for attribution and process understanding, ethical and data-governance considerations, and cost and timeline guidance, and we close with a practical evaluation roadmap and checklist tailored to resource-constrained African settings. (PMC; World Health Organization; re-aim.org)

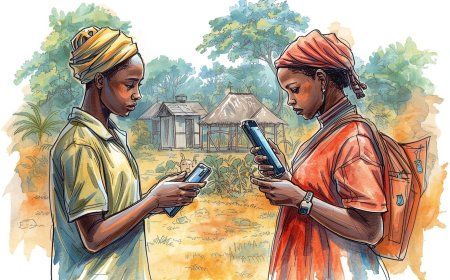

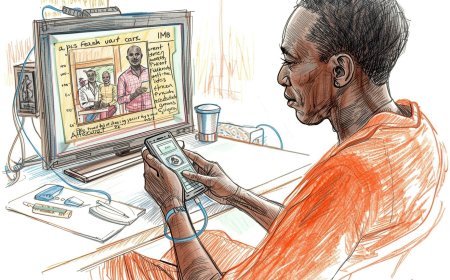

Introduction: why strong evaluation matters for CHW tech in Africa

Digital platforms for CHWs promise better guideline adherence, faster referrals, improved surveillance, and supervisor support. But that promise is context-dependent: devices, connectivity, supervision, incentive structures, and local workflows all shape outcomes. Hard evidence, not anecdote, drives ministry procurement, donor funding, and sustainable scale. Evaluation should therefore answer three core decision questions: (1) does the intervention produce meaningful health or system outcomes (effectiveness)? (2) can it be adopted and implemented at scale with quality (implementation and adoption)? and (3) is it affordable and sustainable (costs, maintenance, and equity)? Use established frameworks to structure these questions, and select methods that balance rigour, feasibility, and timeliness. (World Health Organization)

Frameworks you can use — what they bring and when to use them

1. RE-AIM (Reach, Effectiveness, Adoption, Implementation, Maintenance)

RE-AIM provides a concise, implementation-focused lens that maps directly to programme impact at population scale: who the intervention reaches, whether it works, whether organisations adopt it, how well it is implemented (fidelity and cost), and whether effects are sustained. RE-AIM is particularly useful when implementers must demonstrate scale relevance to ministries and funders. (re-aim.org)

2. WHO Monitoring & Evaluating Digital Health Interventions (M&E guide)

WHO’s guidance gives practical, domain-specific advice: it distinguishes monitoring from impact evaluation, suggests core indicators for digital health (uptime, user satisfaction, fidelity), and emphasises iterative learning, process evaluation, and equity. Use it to align your M&E with global norms and to select digital-specific metrics (sync rate, crash rate, time-to-sync). (World Health Organization)

3. MRC / NIHR Framework for complex interventions

When the CHW tech intervention is part of a broader system change (task-shifting, payment reform, referral networks), treat it as a complex intervention. The MRC guidance recommends a programmatic sequence: development and theory of change, feasibility/piloting, full evaluation (including process evaluation), and implementation research for scale. It is most useful when causal attribution and understanding of mechanisms are priorities. (BMJ)

4. CHW-specific performance & programmatic frameworks (e.g., CHW AIM)

CHW AIM and related frameworks provide indicators and governance questions tailored to CHW programmes (supervision quality, commodities, training, community engagement). Combine CHW-specific measures with digital M&E to capture both the “health system” and “technology” dimensions. (PMC)

Recommendation: blend frameworks. Use RE-AIM for concise reporting to decision-makers, WHO digital-health M&E for technical digital indicators, MRC for study sequencing and causal inference, and CHW frameworks for workforce performance and sustainability metrics. (re-aim.org)

Core evaluation questions (mapped to frameworks)

- Who do we reach? (RE-AIM Reach: denominator and equity)
- Does the tech improve clinical processes or health outcomes? (RE-AIM Effectiveness; MRC outcome evaluation)
- Do clinics and CHW supervisors adopt and integrate the tool? (RE-AIM Adoption)
- How well is it implemented in terms of fidelity, dose, and technical performance? (RE-AIM Implementation; WHO M&E)
- Is the benefit sustained and affordable? (RE-AIM Maintenance; cost-effectiveness)
- Why did it succeed or fail? (Process evaluation per MRC: mechanisms, context)
- Are there unintended harms or equity concerns? (Cross-cutting; WHO and CHW AIM) (re-aim.org)

Recommended study designs — tradeoffs and fit for purpose

- Rapid programme audit / time series (quick, operational): track pre–post trends in key process metrics (e.g., antibiotic prescriptions, referral completion), using interrupted time series (ITS) where longitudinal data exist. Fast and useful for operational decisions, but causal certainty is limited. Good for early pilots. (World Health Organization)
- Before–after with matched controls / quasi-experimental (moderate rigour): difference-in-differences (DiD) or synthetic controls when randomisation is infeasible. Gives better attribution than a simple pre–post comparison. (PMC)
- Stepped-wedge cluster randomised trial (high rigour, practical for phased rollouts): clusters such as districts cross over from control to intervention in a randomised order until all are covered. Because every cluster eventually receives the intervention, the design is often politically and operationally feasible during scale-up while still providing strong causal evidence. Process evaluation and cost data collection can be embedded. (BMJ)
- Cluster randomised trial (gold standard but resource-heavy): use when high internal validity is essential and scale logistics permit. Best paired with a mixed-methods process evaluation. (BMJ)
- Implementation research / hybrid effectiveness–implementation designs: evaluate clinical outcomes and implementation outcomes (acceptability, feasibility) concurrently. Highly policy-relevant. (International Journal of Integrated Care)
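To make the difference-in-differences logic concrete, here is a minimal Python sketch of the two-group, two-period DiD point estimate. It assumes a single indicator (a proportion such as referral completion) measured in intervention and matched comparison areas before and after rollout; the names and figures are hypothetical. A real analysis would use a regression with cluster-robust standard errors and should check the parallel-trends assumption.

```python
from dataclasses import dataclass


@dataclass
class GroupMeans:
    before: float  # indicator value in the baseline period
    after: float   # indicator value in the follow-up period


def did_estimate(intervention: GroupMeans, comparison: GroupMeans) -> float:
    """Change in intervention areas minus change in comparison areas.

    Subtracting the comparison-area change removes secular trends shared by
    both groups; this is valid only under the parallel-trends assumption.
    """
    change_intervention = intervention.after - intervention.before
    change_comparison = comparison.after - comparison.before
    return change_intervention - change_comparison


if __name__ == "__main__":
    # Made-up referral-completion proportions, for illustration only.
    app_districts = GroupMeans(before=0.52, after=0.71)
    matched_districts = GroupMeans(before=0.50, after=0.58)
    effect = did_estimate(app_districts, matched_districts)
    print(f"DiD estimate: {effect:+.2f}")  # prints: DiD estimate: +0.11
```

Here the app districts improved by 19 points and the matched districts by 8, so roughly 11 percentage points are attributed to the intervention rather than to the shared trend.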

Practical rule: choose the most rigorous design feasible given budget, rollout plans, and data systems. For many CHW tech pilots, a stepped-wedge or hybrid design with embedded process evaluation provides the best balance of rigour and policy relevance. (BMJ)
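The stepped-wedge option depends on one mechanism: the order in which clusters cross over is randomised. The Python sketch below shows one way to generate and document that allocation for 12 sub-counties, assuming four equal steps after a baseline period in which no cluster is exposed. The cluster labels, number of steps, and seed are illustrative assumptions, not part of any prescribed protocol.

```python
import random


def stepped_wedge_schedule(clusters, n_steps, seed):
    """Randomly allocate clusters to crossover steps and build the exposure matrix.

    Period 0 is a baseline with no cluster exposed; at each later period another
    group of clusters crosses over, so every cluster is exposed in the final period.
    """
    if len(clusters) % n_steps != 0:
        raise ValueError("this sketch assumes clusters divide evenly across steps")
    rng = random.Random(seed)  # fixed seed so the allocation can be reproduced and audited
    order = list(clusters)
    rng.shuffle(order)
    per_step = len(order) // n_steps
    # Crossover step (1..n_steps) for each cluster, following the randomised order.
    crossover = {c: 1 + i // per_step for i, c in enumerate(order)}
    # exposure[c][t] is 1 if cluster c receives the intervention in period t.
    exposure = {
        c: [1 if t >= crossover[c] else 0 for t in range(n_steps + 1)]
        for c in clusters
    }
    return crossover, exposure


if __name__ == "__main__":
    sub_counties = [f"SC{i:02d}" for i in range(1, 13)]  # hypothetical labels
    crossover, exposure = stepped_wedge_schedule(sub_counties, n_steps=4, seed=2024)
    for sc in sorted(sub_counties, key=crossover.get):
        print(sc, "step", crossover[sc], exposure[sc])
```

Publishing the seed and the resulting table before rollout lets ministries and funders verify that the rollout order was not chosen to favour well-performing areas.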

Key indicators (prioritised, practical set)

Indicators are grouped by RE-AIM dimension and CHW programme function. Pick a small core set (8–12 indicators) for routine reporting and a larger set for periodic evaluation.

Reach & equity

- % of target households visited in the period (coverage, disaggregated by wealth quintile and region).
- % of the eligible population who received services via the CHW app (disaggregated by sex, age, and location). (re-aim.org)
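Disaggregated coverage needs an explicit denominator. The Python sketch below assumes a household listing that records each target household’s wealth quintile (or region) and a set of household IDs visited in the period, taken from app records; both data structures are hypothetical.

```python
from collections import defaultdict


def coverage_by_group(households, visited_ids):
    """Percentage of target households visited, by subgroup.

    households: iterable of (household_id, group) pairs for the target
        denominator, e.g. group = wealth quintile from a household listing.
    visited_ids: set of household IDs with at least one CHW visit in the period.
    """
    targets = defaultdict(int)
    visited = defaultdict(int)
    for hh_id, group in households:
        targets[group] += 1
        if hh_id in visited_ids:
            visited[group] += 1
    return {g: 100.0 * visited[g] / targets[g] for g in sorted(targets)}


if __name__ == "__main__":
    listing = [("H1", "Q1"), ("H2", "Q1"), ("H3", "Q1"), ("H4", "Q5"), ("H5", "Q5")]
    print(coverage_by_group(listing, visited_ids={"H1", "H4", "H5"}))
```

Counting from the listing rather than from app records means unvisited households still appear in the denominator, which is what makes gaps between quintiles visible.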

Effectiveness (process & clinical outcomes)

- % of assessments that are guideline-adherent (e.g., correct IMCI classification).
- Referral completion: % of referrals that resulted in a facility visit within X days.
- Appropriate antibiotic prescription rate (or reduction in inappropriate antibiotic prescriptions).
- A selected health outcome where measurable (e.g., facility deliveries, timely malaria treatment). (PMC)
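Referral completion is one of the indicators that requires linking two sources. The Python sketch below assumes, hypothetically, that app referrals and facility register entries share a patient identifier, which many programmes will not have without extra work. The completion window is left as a parameter because the indicator’s X days is a programme decision; the value used in the example is purely illustrative.

```python
from datetime import date


def referral_completion_rate(referrals, facility_visits, window_days):
    """Percentage of referrals followed by a facility visit within window_days.

    referrals: list of (patient_id, referral_date) from the CHW app.
    facility_visits: dict mapping patient_id -> list of visit dates from facility registers.
    window_days: the programme's pre-specified completion window (the "X days").
    """
    if not referrals:
        return float("nan")
    completed = 0
    for patient_id, referred_on in referrals:
        # A visit counts if it falls on or after the referral date and within the window.
        if any(
            0 <= (visit - referred_on).days <= window_days
            for visit in facility_visits.get(patient_id, [])
        ):
            completed += 1
    return 100.0 * completed / len(referrals)


if __name__ == "__main__":
    referrals = [("P1", date(2024, 3, 1)), ("P2", date(2024, 3, 2)), ("P3", date(2024, 3, 5))]
    visits = {"P1": [date(2024, 3, 3)], "P2": [date(2024, 3, 20)]}
    # window_days=7 is illustrative only; use the programme's own definition.
    print(round(referral_completion_rate(referrals, visits, window_days=7), 1))
```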

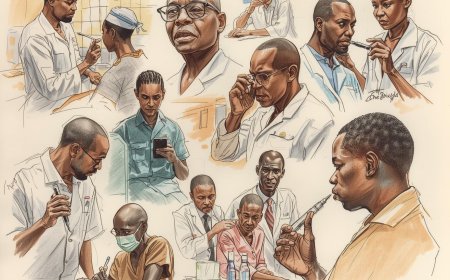

Adoption & acceptability

- % of CHWs actively using the app (daily or weekly active users).
- Supervisor adoption: % of supervisors using the dashboard for mentoring.
- User satisfaction scores (short CHW and patient surveys). (World Health Organization)
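Active-use rates are easy to inflate by dividing only by CHWs who appear in the logs. The Python sketch below instead uses the programme roster as the denominator, so trained CHWs who never open the app count against adoption. The event export format (CHW ID plus date) is a hypothetical assumption about what the app logs provide.

```python
from datetime import date


def weekly_active_rate(app_events, roster, week_start):
    """Percentage of rostered CHWs with at least one app event in the 7 days from week_start.

    app_events: iterable of (chw_id, event_date) pairs exported from app logs.
    roster: set of CHW IDs expected to use the app (the denominator).
    """
    active = {
        chw for chw, day in app_events
        if chw in roster and 0 <= (day - week_start).days < 7
    }
    return 100.0 * len(active) / len(roster)


if __name__ == "__main__":
    roster = {"C1", "C2", "C3", "C4"}
    events = [
        ("C1", date(2024, 5, 6)), ("C1", date(2024, 5, 7)),
        ("C2", date(2024, 5, 12)),
        ("C3", date(2024, 5, 13)),  # falls in the following week
        ("C9", date(2024, 5, 8)),   # not on the roster, so excluded
    ]
    print(weekly_active_rate(events, roster, week_start=date(2024, 5, 6)))  # 50.0
```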

Implementation & fidelity

- App uptime and sync success rate; average time per consult in the app.
- % of visits with all mandatory fields complete (data quality).
- Fidelity score from observed visits (OSCE-style checklist). (World Health Organization)
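Two of the implementation indicators above can be computed directly from app records. The Python sketch below assumes sync attempts are logged as success/failure flags and that visit records are dictionaries keyed by field name; the field names in the example are a hypothetical schema, not a standard.

```python
def sync_success_rate(sync_attempts):
    """Percentage of sync attempts that succeeded (sync_attempts: list of booleans)."""
    return 100.0 * sum(sync_attempts) / len(sync_attempts)


def mandatory_field_completeness(visits, mandatory_fields):
    """Percentage of visit records in which every mandatory field is filled.

    A field counts as missing if it is absent, None, or an empty string;
    legitimate zero values (e.g. 0 months of age) count as present.
    """
    complete = sum(
        all(record.get(field) not in (None, "") for field in mandatory_fields)
        for record in visits
    )
    return 100.0 * complete / len(visits)


if __name__ == "__main__":
    fields = ["age_months", "temperature_c", "classification"]  # hypothetical schema
    visits = [
        {"age_months": 14, "temperature_c": 38.6, "classification": "fever"},
        {"age_months": 0, "temperature_c": 37.2, "classification": "no fever"},
        {"age_months": 30, "temperature_c": None, "classification": "fever"},
        {"age_months": 9, "temperature_c": 39.1, "classification": ""},
    ]
    print(mandatory_field_completeness(visits, fields))  # 50.0
    print(sync_success_rate([True, True, False, True]))   # 75.0
```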

Maintenance & costs

- Retention of CHWs using the system at 6 and 12 months.
- Cost per consult and budget impact; projected per-facility operating costs at scale. (BMJ)
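Retention at 6 and 12 months is sensitive to who is counted. The Python sketch below treats a CHW as retained if their most recent app activity falls on or after the horizon date, and only counts CHWs onboarded long enough ago to be observed at that horizon. The definition of “retained”, the day-based approximation of months (182 and 365 days), and the data structures are assumptions for illustration.

```python
from datetime import date, timedelta


def retention_rate(onboarded, last_active, horizon_days, data_cutoff):
    """Percentage of CHWs still using the system horizon_days after onboarding.

    onboarded: dict chw_id -> onboarding date.
    last_active: dict chw_id -> date of most recent app activity.
    Only CHWs onboarded at least horizon_days before data_cutoff are eligible,
    so recently trained CHWs are not counted as drop-outs.
    """
    eligible = [
        chw for chw, start in onboarded.items()
        if start + timedelta(days=horizon_days) <= data_cutoff
    ]
    if not eligible:
        return float("nan")
    retained = [
        chw for chw in eligible
        if chw in last_active
        and last_active[chw] >= onboarded[chw] + timedelta(days=horizon_days)
    ]
    return 100.0 * len(retained) / len(eligible)


if __name__ == "__main__":
    onboarded = {"C1": date(2023, 1, 10), "C2": date(2023, 1, 10),
                 "C3": date(2023, 2, 1), "C4": date(2024, 1, 15)}
    last_active = {"C1": date(2024, 2, 1), "C2": date(2023, 5, 1), "C3": date(2023, 9, 1)}
    cutoff = date(2024, 3, 1)
    # Months approximated as 182 and 365 days in this sketch.
    print(round(retention_rate(onboarded, last_active, 182, cutoff), 1))  # 6 months
    print(round(retention_rate(onboarded, last_active, 365, cutoff), 1))  # 12 months
```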

Safety & unintended consequences

- Reported incidents linked to app guidance (adverse events).
- Evidence of data misuse or privacy breaches (audited). (World Health Organization)

Mixed-methods package — how to combine quantitative and qualitative

-

Routine digital logs + dashboard metrics (quantitative, high frequency)

-

Use app analytics for adoption, use intensity, error rates, and geo-tagged coverage. Good for monitoring and triggering supervisory action.

-

-

Health-system administrative data & facility records

-

For referrals, admissions, commodity use, and broader impact metrics. Use ITS or DiD when available.

-

-

Targeted surveys (CHWs & service users)

-

Short mobile surveys for satisfaction, equity, and recall of key messages.

-

-

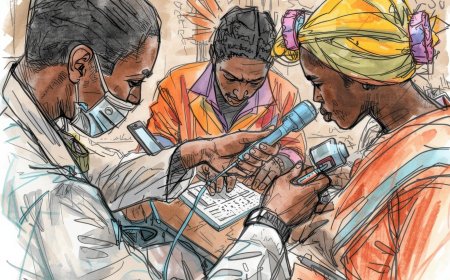

Direct observation & clinical vignettes

-

OSCEs or observed visits to measure fidelity and competence.

-

-

Qualitative methods

-

Key-informant interviews with supervisors, focus groups with CHWs and community members, and rapid ethnography to understand contextual barriers to adoption.

-

-

Process evaluation (mechanisms)

-

Map the theory of change and use mixed methods to explain how and why outcomes occurred (or did not). MRC guidance emphasises process evaluation alongside outcome measurement. BMJ+1

-
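The administrative-data item above mentions interrupted time series. The Python sketch below shows the core idea in its simplest form: fit a linear trend to the pre-rollout months, project it forward as the counterfactual, and compare observed post-rollout values with that projection. It is a teaching simplification under stated assumptions (a monthly series, a single rollout date, a linear pre-trend); a full ITS would use segmented regression with level- and slope-change terms and would account for autocorrelation and seasonality.

```python
def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept for a single predictor."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def its_projection(series, rollout_index):
    """Mean difference between observed post-rollout values and the projected pre-trend.

    series: monthly indicator values in time order.
    rollout_index: position of the first post-rollout month.
    """
    if rollout_index < 2 or rollout_index >= len(series):
        raise ValueError("need at least two pre-rollout and one post-rollout point")
    slope, intercept = fit_line(list(range(rollout_index)), series[:rollout_index])
    # Counterfactual for month t is the pre-rollout trend extended to t.
    diffs = [
        series[t] - (intercept + slope * t)
        for t in range(rollout_index, len(series))
    ]
    return sum(diffs) / len(diffs)


if __name__ == "__main__":
    # Hypothetical monthly % of guideline-adherent assessments; app launched in month 6.
    adherence = [50, 51, 52, 53, 54, 55, 62, 63, 64, 65]
    print(its_projection(adherence, rollout_index=6))
```

In this made-up series the pre-rollout trend already rises by one point per month, so only the 6-point gap above that projected trend, not the full rise from 55 to 65, is attributed to the app.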

Data quality, attribution, and bias mitigation

- Triangulate: use at least two independent data sources for key outcomes (e.g., app logs, facility registers, and CHW surveys).
- Audit samples: randomly select visit records and verify them on the ground (supervisor spot checks) to estimate data-entry error rates.
- Pre-registration and analysis plan: pre-register the evaluation design and primary outcomes when feasible to reduce selective reporting.
- Adjust for secular trends: use control areas or ITS to account for seasonality and concurrent programmes.
- Handle missing data: document the reasons (connectivity vs non-use) and use appropriate imputation or sensitivity analyses. (World Health Organization)
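For the audit-sample step, the Python sketch below draws a reproducible simple random sample of visit records for supervisor spot checks and reports the observed data-entry error rate with an approximate 95% Wilson score interval. The record IDs, sample size, and error count in the example are illustrative assumptions; in practice the sample size should be set for the precision the programme needs.

```python
import math
import random


def draw_audit_sample(record_ids, n, seed):
    """Simple random sample of visit records for supervisor spot checks."""
    rng = random.Random(seed)  # record the seed so the draw can be reproduced
    return rng.sample(list(record_ids), n)


def error_rate_wilson(n_errors, n_checked, z=1.96):
    """Observed error rate with an approximate 95% Wilson score interval."""
    p = n_errors / n_checked
    denom = 1 + z**2 / n_checked
    centre = (p + z**2 / (2 * n_checked)) / denom
    half = z * math.sqrt(p * (1 - p) / n_checked + z**2 / (4 * n_checked**2)) / denom
    return p, max(0.0, centre - half), min(1.0, centre + half)


if __name__ == "__main__":
    sample = draw_audit_sample(range(1, 1201), n=50, seed=7)  # 50 of 1,200 records
    # Suppose field verification finds 6 records that disagree with the app entry.
    p, lo, hi = error_rate_wilson(n_errors=6, n_checked=len(sample))
    print(f"error rate {p:.1%} (95% CI {lo:.1%} to {hi:.1%})")
```

The Wilson interval is used here instead of the simple normal approximation because it behaves better for small samples and error rates near zero, which is typical of spot checks.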

Economic and affordability analysis

Include a basic budget-impact and cost-effectiveness component:

- Micro-cost the intervention: devices, training, supervision, hosting, data bundles, and maintenance.
- Estimate per-consult costs at current scale and projected costs at scale (economies of scale).
- Budget impact: net cost (or savings) to the buyer over 1–5 years, including changes in commodity use, referrals, and admissions avoided.
- (Optional) Cost-effectiveness analysis (CEA): cost per disability-adjusted life year (DALY) averted or per quality-adjusted life year (QALY) gained, if health outcomes are measurable and data are available. (BMJ)
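The micro-costing steps can be expressed as a short calculation. The Python sketch below annualises capital items such as devices with a standard annuity factor, adds annual recurrent costs to give a cost per consult, and computes an incremental cost-effectiveness ratio (ICER) per DALY averted against a comparator. The line items, the 3% default discount rate, and all figures in the example are assumptions for illustration, not recommended values; use the national or funder costing convention.

```python
def equivalent_annual_cost(price, life_years, discount_rate):
    """Annualise a capital item over its useful life using an annuity factor."""
    if discount_rate == 0:
        return price / life_years
    annuity_factor = (1 - (1 + discount_rate) ** -life_years) / discount_rate
    return price / annuity_factor


def cost_per_consult(recurrent_costs, capital_items, consults_per_year, discount_rate=0.03):
    """Annual cost per consultation from micro-costed line items.

    recurrent_costs: dict of annual recurrent costs (e.g. supervision, hosting,
        data bundles, maintenance, refresher training).
    capital_items: list of (price, useful_life_years) tuples (e.g. devices).
    """
    annual_capital = sum(
        equivalent_annual_cost(price, life, discount_rate) for price, life in capital_items
    )
    return (sum(recurrent_costs.values()) + annual_capital) / consults_per_year


def icer_per_daly(cost_intervention, cost_comparator, dalys_averted):
    """Incremental cost per DALY averted relative to the comparator (e.g. paper-based care)."""
    if dalys_averted <= 0:
        raise ValueError("this sketch only handles interventions that avert DALYs")
    return (cost_intervention - cost_comparator) / dalys_averted


if __name__ == "__main__":
    recurrent = {"supervision": 12000, "hosting": 3000, "data_bundles": 6000, "maintenance": 2000}
    devices = [(150, 3)] * 100  # 100 tablets; hypothetical price and 3-year life
    print(round(cost_per_consult(recurrent, devices, consults_per_year=40000), 2))
    print(icer_per_daly(cost_intervention=90000, cost_comparator=60000, dalys_averted=120))
```

Re-running the cost-per-consult function with the projected consult volume at scale gives the “projected costs at scale” figure, since fixed costs such as hosting are spread over more consults.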

Ethics, data governance, and safety monitoring

- Informed consent: adapt consent to CHW workflows, with community information, opt-out for routine service data where national law permits, and explicit consent for research components.
- Data protection: follow national data protection laws; apply WHO digital-health guidance on minimal data collection and on encryption in transit and at rest.
- Adverse event reporting: integrate digital-related incidents into facility incident reporting and create escalation protocols.
- Equity safeguards: pre-specify subgroup analyses (gender, remote vs urban, poorest quintiles) and mitigation plans in case inequities emerge. (World Health Organization)

Practical evaluation roadmap & timeline (6–18 months example)

Phase 0 (0–2 months): Define evaluation questions, theory of change, core indicators, data sources; secure approvals.

Phase 1 (2–4 months): Baseline data collection (facility registers, CHW surveys, and app logs only where a version of the app is already in use before rollout); finalise data collection tools.

Phase 2 (4–10 months): Rollout with monitoring—collect routine metrics, early process evaluation (qualitative), and midline OSCEs.

Phase 3 (10–14 months): Primary outcome measurement (endline), economic data collection, in-depth qualitative follow-up.

Phase 4 (14–18 months): Analysis, sensitivity analyses, stakeholder validation workshop, policy brief, and dissemination. (World Health Organization)

Sample short evaluation plan (one-page schematic)

- Objective: Measure whether a CHW app increases guideline-adherent case management of fever in children under five.
- Design: Stepped-wedge cluster randomised trial across 12 sub-counties, with an embedded process evaluation.
- Primary outcome: % of febrile children correctly classified and managed per IMCI at 12 months.
- Secondary outcomes: antibiotic prescription rate, referral completion, CHW active-use rate, cost per consult.
- Data sources: app logs, facility registers, direct observation and OSCEs, caregiver exit surveys, programme cost ledger.
- Analysis: Intention-to-treat mixed-effects regression with fixed effects for time period (to adjust for secular trends) and random effects for sub-county; process analysis to explore mechanisms (qualitative data plus fidelity scores).
- Ethics & governance: district ethics approval, data-sharing agreement, adverse-event SOP. (BMJ)
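The analysis line of this plan can be written as a model. A common specification for a stepped-wedge trial with a binary outcome, shown here as an assumption rather than the only valid choice, is a mixed-effects logistic regression with fixed period effects and a random intercept for each cluster:

```latex
\operatorname{logit} \Pr(Y_{ijk} = 1) = \mu + \beta_j + \theta X_{ij} + u_i,
\qquad u_i \sim \mathcal{N}(0, \tau^2)
```

Here i indexes sub-counties (clusters), j time periods, and k children; Y_ijk = 1 if a febrile child is correctly classified and managed per IMCI; β_j are fixed period effects (with β_1 = 0) that absorb secular trends; X_ij = 1 once sub-county i has crossed over to the app in period j, according to the randomised schedule (intention to treat); u_i captures between-cluster variation; and exp(θ) is the intervention odds ratio.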

12-Point operational checklist (ready to use)

1. Write a concise theory of change that links digital inputs → CHW behaviour → system outcomes.
2. Select 8–12 core indicators mapped to RE-AIM and CHW AIM (a mix of digital, clinical, and programmatic). (re-aim.org)
3. Choose the most rigorous feasible study design (stepped-wedge preferred for phased rollouts). (BMJ)
4. Pre-register primary outcomes and the analysis plan where possible.
5. Build or verify data pipelines: app logs → cleaned datasets → dashboards.
6. Budget for routine data audits and ground-truth validation (spot checks).
7. Embed process evaluation (qualitative teams) from the start. (International Journal of Integrated Care)
8. Micro-cost the intervention for budget impact and CEA inputs.
9. Define equity subgroups and pre-specify subgroup analyses.
10. Secure ethical approvals and data governance agreements early. (World Health Organization)
11. Plan stakeholder validation workshops for interpreting findings and translating them into policy.
12. Prepare short, buyer-focused outputs: a 1-page policy brief (ministry), a 1-page operational brief (facility), and a technical annex (methods and data). (World Health Organization)

Limitations and realistic expectations

- Small pilots rarely provide definitive evidence on population health outcomes; use them to measure proximal process changes and to parameterise models.
- Data quality remains the Achilles’ heel: invest in audits and human verification.
- Evaluations demand resources: build the evaluation budget into implementation from the start.
- Attribution will often require quasi-experimental designs when randomisation is infeasible; be candid about uncertainty. (World Health Organization)

Conclusion

Measuring the impact of CHW technology interventions requires combining practical, digital-specific monitoring with rigorous evaluation methods that answer policymakers’ questions about effectiveness, adoption, fidelity, equity, and affordability. Use RE-AIM for clear, scale-focused reporting, WHO M&E guidance for digital health metrics, MRC for sequencing complex-intervention research, and CHW-specific frameworks to capture workforce sustainability. A mixed-methods stepped-wedge or hybrid design with embedded process evaluation and economic analysis will usually deliver the evidence that ministries, funders, and implementers need to decide on scale. Commit to transparent documentation, routine data audits, and stakeholder engagement to translate evidence into policy and practice. (re-aim.org; World Health Organization)

References

Skivington, K., et al. (2021). A new framework for developing and evaluating complex interventions: Update of Medical Research Council guidance. BMJ, 374, n2061.

World Health Organization. (2016). Monitoring and evaluating digital health interventions: A practical guide to conducting research and assessment. World Health Organization.

RE-AIM. (n.d.). What is RE-AIM? Retrieved from the RE-AIM website (re-aim.org).

Agarwal, S., et al. (2019). A conceptual framework for measuring community health workforce performance within primary health care systems. Human Resources for Health.

Rathod, L., et al. (2024). Process evaluations for the scale-up of complex interventions: Practical guidance. International Journal of Integrated Care.
